A good peer review experience with Moodle Workshop

Dr Mira Vogel follows up on the previous Moodle Workshop activity.

6 August 2015

Workshop is an activity in Moodle which allows staff to set up a peer assessment or (in our case) peer review.

Workshop:

- collects student work

- automatically allocates reviewers

- allows the review to be scaffolded with questions

- imposes deadlines on the submission and assessment phases

- provides a dashboard so staff can follow progress, and

- allows staff to assess the reviews/assessments as well as the submissions.

However, except for some intrepid pioneers, it is almost never seen in the wild.

The reason is partly the daunting number and nature of the settings (there are several pitfalls to avoid which aren’t obvious on first pass), but also that, because Workshop is a process, you can’t easily see a demo, and running a test instance is pretty time-consuming. If people try once and it doesn’t work well, they rarely try again.

Well, look no further – UCL Arena Centre and Digital Education have it working well now and can support you with your own peer review.

What happened?

Students on the UCL Arena Teaching Associate Programme reviewed each other’s case studies.

Twenty-two of them then completed a short evaluation questionnaire in which they rated their experience of giving and receiving feedback on a five-point scale and commented on their responses.

The students were from two groups with different tutors running the peer review activity. A third group leader chose to run the peer review on Moodle Forum instead, since it would allow students to easily see each other’s case studies and feedback.

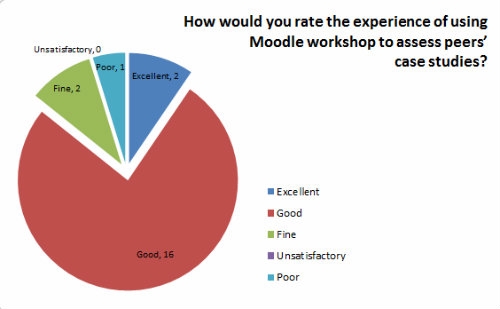

The students reported that giving feedback went well (21 respondents):

How would you rate the experience of using Moodle Workshop to assess peers' case studies?

- 2: excellent

- 16: good

- 2: fine

- 1: poor

This indicates that the measures we took – see previous post – to address disorientation and participation were successful.

In particular, we were more aware of where the description, instructions for submission, instructions for assessment, and concluding comments would display, and put the relevant information into each.

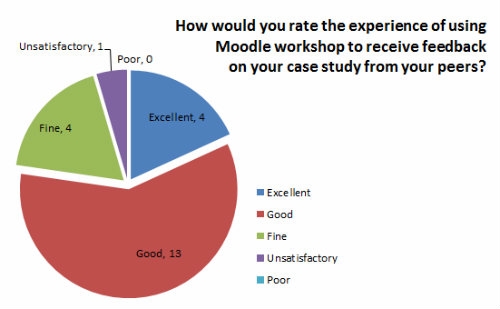

Receiving feedback also went well (22 respondents) though with a slightly bigger spread in both directions:

How would you rate the experience of using Moodle Workshop to receive feedback on your case study from your peers?

- 4: excellent

- 13: good

- 4: fine

- 0: poor

- 1: unsatisfactory
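As a quick check on the two sets of ratings above, here is a minimal sketch that totals the responses and works out the share of favourable ratings. The counts are copied from the lists above; treating "excellent" and "good" together as favourable is my assumption for illustration, not something the questionnaire defined, and the function name is made up.

```python
# Counts copied from the two survey questions above.
giving = {"excellent": 2, "good": 16, "fine": 2, "poor": 1}
receiving = {"excellent": 4, "good": 13, "fine": 4, "poor": 0, "unsatisfactory": 1}


def share_favourable(counts, favourable=("excellent", "good")):
    """Return (number of respondents, rounded % rating excellent or good).

    Grouping "excellent" and "good" as favourable is an assumption
    made for this illustration only.
    """
    total = sum(counts.values())
    positive = sum(counts.get(label, 0) for label in favourable)
    return total, round(100 * positive / total)


for name, counts in (("giving", giving), ("receiving", receiving)):
    total, pct = share_favourable(counts)
    print(f"{name}: {total} respondents, {pct}% excellent or good")
```

The totals match the respondent numbers quoted above (21 and 22), which is a useful sanity check when copying figures out of a questionnaire export.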

Students appreciated:

- Feedback on their work.

- Insights about their own work from considering others’ work.

- Being able to edit their submission in advance of the deadline.

- The improved instructions letting them know what to do, when and where.

Staff appreciated:

This hasn’t been formally evaluated, but from informal conversations I know that the two group leaders appreciate Moodle taking on the grunt work of allocation.

However, this depends on setting a hard deadline with no late submissions (otherwise staff have to keep checking for late submissions and allocating those manually), and one of the leaders was less comfortable with this than the other.

Neither found it too onerous to write diary notes to send reminders and alerts to students to move the activity along – in any case this manual messaging will hopefully become unnecessary with the arrival of Moodle Events in the coming upgrade.

For next time:

- Improve signposting from the Moodle course area front page, and maybe the title of the Workshop itself, so students know what to do and when.

- Instructions: let students know how many reviews they are expected to do; let them know if they should expect variety in how the submissions display – in our case some were attachments while others were typed directly into Moodle (we may want to set attachments to zero); include word count guidance in the instructions for submission and assessment.

- Consider including an example case study & review for reference (Workshop allows this).

- Address the issue that, due to some non-participation during the Assessment phase, some students gave more feedback than they received.

- We originally had a single comments field but will now structure the peer review with some questions aligned to the relevant parts of the criteria.

- Decide about anonymity – should both submissions and reviews be anonymous, or one or the other, or neither? These can be configured via the Workshop’s Permissions. Let students know who can see what.

- Also to consider – we could change Permissions after the activity is complete (or even while it’s running) to allow students to access the dashboard and see all the case studies and all the feedback.
