Once a product has been prototyped, it must be rigorously tested and evaluated before it goes into production to ensure that it can accomplish the task it is designed to perform. This is a refining process: in most cases, multiple versions need to be developed to improve performance, reliability, and repeatability. Within this phase, a number of human factors methods can help identify potential errors and usability concerns, including cognitive walkthroughs, heuristic evaluations, usability studies, user experience evaluations, and ‘in the wild’ testing. Cognitive walkthroughs and heuristic evaluations are expert evaluations, meaning they are conducted by human factors experts within the domain of healthcare, who provide feedback and solutions for potential user errors. Usability, user experience, and ‘in the wild’ evaluations assess the user’s ability to perform tasks and establish whether the healthcare product is usable, useful, safe, and satisfying to the user. The test and refine stage is iterative, allowing changes to the design to continue until the new technology meets the user’s needs.

In this section, each of the test and refine methods is expanded upon, highlighting what the method is and why, when, and how it can be used.

- Cognitive Walkthrough

Conducted by a human factors expert, who reviews the system to identify potential use errors.

What and Why

A cognitive walkthrough is an evaluation method for identifying how easily users can perform tasks. Unlike a usability study, which observes participants performing tasks, a cognitive walkthrough is best conducted by a human factors expert. If the evaluator is too familiar with the product, they may miss significant issues. Essentially, the evaluator must put themselves in the shoes of the potential user; using personas will greatly assist in achieving this.

When

A cognitive walkthrough is best conducted during the early design stage, prior to user testing, and can even be based on sketches of the product. This allows the expert to identify key issues that still require attention before formal testing with participants.

How

A cognitive walkthrough requires a set of user tasks for the evaluator to examine. The tasks can be grouped around a specific high-level task, for example: setting up the product, using it to capture information, or retrieving information from it. For each task, the evaluator works through the steps of the task, asking four questions (see below) about the user’s likely thinking at that step and the feedback they receive from the product. Through this process, the evaluator identifies potential user difficulties and errors.
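To keep the walkthrough traceable, the evaluator can record a yes/no answer to each of the four questions for every step and collect the ‘no’ answers as potential problems. The Python sketch below assumes that simple record format; `StepReview`, `potential_problems`, and the blood glucose meter steps are hypothetical illustrations, not part of any standard tool.

```python
from dataclasses import dataclass

# The four walkthrough questions, paraphrased from "Points to Ponder" below.
QUESTIONS = (
    "Will the user try to achieve the right outcome?",
    "Is the next step apparent from the last action?",
    "Is there a clear link between the action and the expected outcome?",
    "Is there an indication that the user is progressing towards the goal?",
)


@dataclass
class StepReview:
    """Evaluator's answers for one step of one task (hypothetical record format)."""
    task: str
    step: str
    answers: tuple  # four booleans, one per question, in QUESTIONS order
    notes: str = ""


def potential_problems(reviews):
    """Return (task, step, question) for every question answered 'no'."""
    problems = []
    for review in reviews:
        if len(review.answers) != len(QUESTIONS):
            raise ValueError(f"{review.step!r}: expected {len(QUESTIONS)} answers")
        for question, ok in zip(QUESTIONS, review.answers):
            if not ok:
                problems.append((review.task, review.step, question))
    return problems


# Hypothetical walkthrough of a blood glucose meter set-up task.
reviews = [
    StepReview("Set up product", "Pair meter with phone",
               (True, False, True, False), notes="No cue that pairing has started"),
    StepReview("Set up product", "Enter target range", (True, True, True, True)),
]

for task, step, question in potential_problems(reviews):
    print(f"{task} / {step}: {question}")
```

Each flagged (task, step, question) triple then becomes a candidate issue to discuss and address before formal testing with participants.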

Pros

Cost-effective, quick to run, and requires no participant recruitment.

Cons

It can be difficult to put yourself in the shoes of the intended users.

Points to Ponder (the four questions)

Will the user try to achieve the right outcome, based on the information available to them?

Is it apparent what the next step is based on the last action?

Is there a clear link between the action the user performs and the outcome they expect to achieve?

When a correct action is taken, is there some indication that the user is heading towards achieving their goal?

Resources

For further information on conducting a cognitive walkthrough, click here (10 min read).

- Heuristic Evaluation

An evaluation conducted by human factors experts to identify potential usability issues that intended users may encounter when interacting with a product’s user interface.

What and Why

Heuristic evaluation is a method that allows human factors experts to assess the usability of a user interface against a predefined list of rules of thumb (the heuristics). A number of heuristic sets can be used to evaluate a user interface, including Nielsen’s, Shneiderman’s, Gerhardt-Powals’, and Connell and Hammond’s. The heuristics are designed to cover the areas that users typically have the most difficulty with, so evaluating the product’s user interface against these known areas of concern during the design phase can help improve the user’s experience with the product. Nielsen’s 10 usability heuristics are a good guideline when developing user interfaces and cover: visibility of system status; match between system and the real world; user control and freedom; consistency and standards; error prevention; recognition rather than recall; flexibility and efficiency of use; aesthetic and minimalist design; helping users recognise, diagnose, and recover from errors; and help and documentation. More detail on these 10 heuristics can be found on the NN/g website (10 min read).
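When several evaluators apply Nielsen’s heuristics independently, their findings are later merged into one list in which each problem is tagged with the heuristic it relates to (step 7 of the How section). A minimal Python sketch of that bookkeeping, assuming each finding is recorded as an `(evaluator, heuristic, problem)` tuple and that duplicate problems share identical wording; the heuristic labels are short forms, and the findings are invented examples.

```python
from collections import defaultdict

# Short labels for Nielsen's 10 usability heuristics.
NIELSEN_HEURISTICS = (
    "Visibility of system status",
    "Match between system and the real world",
    "User control and freedom",
    "Consistency and standards",
    "Error prevention",
    "Recognition rather than recall",
    "Flexibility and efficiency of use",
    "Aesthetic and minimalist design",
    "Help users recognise, diagnose, and recover from errors",
    "Help and documentation",
)


def merge_findings(findings):
    """Merge (evaluator, heuristic, problem) tuples from independent evaluators.

    Returns {heuristic: {problem: sorted evaluators who reported it}}.
    Assumes duplicate problems were written with identical wording.
    """
    merged = defaultdict(lambda: defaultdict(set))
    for evaluator, heuristic, problem in findings:
        if heuristic not in NIELSEN_HEURISTICS:
            raise ValueError(f"Unknown heuristic: {heuristic!r}")
        merged[heuristic][problem].add(evaluator)
    # Convert to plain dicts and sorted lists for a stable report.
    return {h: {p: sorted(e) for p, e in probs.items()} for h, probs in merged.items()}


# Invented findings from two evaluators.
findings = [
    ("Evaluator A", "Error prevention", "Dose field accepts out-of-range values"),
    ("Evaluator B", "Error prevention", "Dose field accepts out-of-range values"),
    ("Evaluator B", "Visibility of system status", "No indication that readings are syncing"),
]

for heuristic, problems in merge_findings(findings).items():
    for problem, evaluators in problems.items():
        print(f"[{heuristic}] {problem} (reported by {len(evaluators)})")
```

In practice the wording of near-duplicate problems is reconciled by discussion during the debrief; the exact-match grouping here only illustrates the structure of the final list.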

When

Like a cognitive walkthrough, a heuristic evaluation can be conducted at multiple points during development, starting as early as sketch prototypes of the user interface. This allows obvious issues to be discovered in low-fidelity prototypes, and more nuanced issues, which can only be found with a high-fidelity user interface, to be discovered later.

How

A heuristic evaluation can be conducted using the following seven steps.

1. Decide which set of heuristics best suits your user interface. Additional heuristics may also be included, depending on the product being developed.

2. Work with healthcare usability experts and not potential users. It is recommended that multiple experts, ideally five, are used to ensure that all aspects of the user interface are properly evaluated.

3. Briefing session. Ensure that those evaluating the user interface know what is required of them and which aspects of the user interface you want them to evaluate. The information provided to each evaluator should be the same so as not to introduce bias into the evaluation.

4. Begin the first phase. This phase is exploratory, giving the evaluators time to understand the interactions and scope of the product. Then, as experts, they can decide which heuristics they want to focus on. Ideally, each evaluator works independently of the other evaluators during this phase.

5. Begin the second phase. This phase is for the evaluators to apply the heuristics to the user interface they are evaluating.

6. Record the findings. This can be done in one of two ways: an observer may take notes and possibly record the evaluator’s interactions, or the evaluator can make their own notes. Having an observer present requires coordination between parties; however, it reduces the time required to analyse the data, as analysis can be done on the fly with no need to interpret the evaluator’s decisions afterwards.

7. Debriefing session. This is the time for the evaluators to come together and synthesise their findings into a complete list of problems. This phase also demonstrates why using usability experts, rather than potential users, is important: the evaluators are asked not only to define usability issues but also to recommend how to address them. The report should list all identified problems, explain why each is an issue, state the heuristic each problem relates to, and provide suggestions to improve the usability of the product.

Pros

Evaluators have the freedom to focus on heuristics they are familiar with. The evaluation can be conducted at the early stages of development. Not only are usability concerns identified, but potential solutions are also provided from healthcare usability experts.

Cons

It can be difficult to find enough domain-specific usability experts to evaluate and cover all areas of the heuristics. Evaluators who are not familiar with healthcare may overshoot the mark and identify issues that would not be usability issues for the intended users (e.g., terminology).

Points to Ponder

What aspect of the user interface should be evaluated?

Do the heuristics you are using cover all elements of usability for your user interface?

Are the evaluators familiar with healthcare products and user interfaces?

When in the project is the best time to conduct a heuristic evaluation?

Resources

For more on heuristic evaluation, including additional tips, click here (5 min read plus 8 min video). For more details on how to conduct a heuristic evaluation, click here (12 min read).

- Usability Study

Identify problems with your product and discover opportunities to improve it.

What and Why

A usability study evaluates participants’ ability to use a product or service by observing them using it. Participants should be representative of the people who would normally use the product, so that the study can determine how effective and efficient the product is to use, how easy it is to learn, and which key concerns participants have with it. Doing so provides strong evidence of how close the product is to being ready for deployment, and can also establish the product’s utility by showing whether it provides the right service to the user. The output of a usability study includes both qualitative results (the users’ opinions of the product, including likes, dislikes, and desired features) and quantitative results (task success, completion times, error rates, etc.).
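The quantitative results can be tabulated per task. This Python sketch assumes one record per participant per task; the `TaskAttempt` fields, the choice to report median time over successful attempts only, and the sample numbers are illustrative assumptions rather than a prescribed analysis.

```python
from dataclasses import dataclass
from statistics import mean, median


@dataclass
class TaskAttempt:
    participant: str
    task: str
    completed: bool
    seconds: float  # time on task
    errors: int     # observed use errors during the attempt


def summarise(attempts, task):
    """Summarise the quantitative results for one task."""
    rows = [a for a in attempts if a.task == task]
    if not rows:
        raise ValueError(f"No attempts recorded for {task!r}")
    successful_times = [a.seconds for a in rows if a.completed]
    return {
        "participants": len(rows),
        "success_rate": sum(a.completed for a in rows) / len(rows),
        # Median time over successful attempts only (one possible convention).
        "median_time_s": median(successful_times) if successful_times else None,
        "errors_per_participant": mean(a.errors for a in rows),
    }


# Invented data for a hypothetical "Record reading" task.
attempts = [
    TaskAttempt("P1", "Record reading", True, 42, 0),
    TaskAttempt("P2", "Record reading", True, 58, 1),
    TaskAttempt("P3", "Record reading", False, 120, 3),
    TaskAttempt("P4", "Record reading", True, 50, 0),
]
print(summarise(attempts, "Record reading"))
```

The qualitative results (likes, dislikes, desired features) do not reduce to numbers this way and are normally analysed alongside these figures.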

When

Conducting a usability study early in the development stage allows issues to be identified and resolved while the cost of implementation is low. In Figure 3, the usability study is shown as being conducted as soon as a working prototype is developed, and in some cases the prototype can be very low fidelity. For example, if you were developing a health application for a mobile device, evaluating a wireframe model (basic sketches of the skeletal framework of an app or website) could identify many of the same issues as evaluating a completed app, but adjusting a wireframe costs much less than reconfiguring a completed application. For some products, multiple usability studies may need to be run at different points along the product development path to evaluate different aspects of the product.

How

A usability study should be well structured and planned well in advance to allow time to pilot it. This ensures you capture the information that is most important for progressing the development of the product. Construct a set of tasks for the participant to complete, with a task scenario to accompany each one. The tasks could be independent of each other or sequential; either way, they should be the tasks identified as the most common, critical, or important, or as having previously been performed incorrectly.

Understanding the users’ thought processes during the usability test is paramount in identifying points of frustration, misinterpretation, and opportunities for improvement. To achieve this, a ‘Think Aloud’ method is commonly adopted. This method invites users to continuously verbalise their thought processes, detailing what they are thinking and describing any difficulties they have when performing the task (e.g., not knowing where to click to submit a form on a website). Think Aloud can be conducted concurrently with the task or retrospectively. A retrospective Think Aloud may be best suited to healthcare settings where users cannot verbalise their thoughts while acting, such as when interacting with a patient or performing a task that requires a significant amount of cognitive effort. In these instances, having the user think aloud retrospectively while watching a video playback can deliver similar results; however, the shorter the time between the recording and the playback, the more accurate the recall. Alongside Think Aloud, ‘Probing’ is another technique that can be used, in which the researcher asks follow-up questions when they notice something they have not seen before or when the participant expresses something the researcher would like to explore further. Again, probing can occur either concurrently with the task or retrospectively.

Also consider how you will capture the users’ actions. Options include sound recording, screen recording, participant recording, action tracking, gesture tracking, gaze tracking, or any combination of these.

A usability test should follow this basic plan:

1. Welcome the participant and explain the purpose of the usability study.

2. Explain to the user how you are going to capture their thought processes (Think Aloud and/or probes, either concurrently or retrospectively).

3. Remind the participant that you are testing the product, not them.

4. Give them a task scenario and have them start the task (take good notes during the session, as this is easier than trying to recall the session later).

5. Continue with the tasks until they are all completed or the allotted time has ended; running out of time can itself indicate the difficulty of the tasks.

6. At the end of the session, you may want to administer an exit survey, such as the System Usability Scale (SUS), to capture users’ initial perceptions of the device. Finally, thank the participant and provide any agreed incentive.
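The SUS mentioned in step 6 is a 10-item questionnaire, each item rated from 1 (strongly disagree) to 5 (strongly agree). Its conventional scoring rule is: odd-numbered (positively worded) items contribute the rating minus 1, even-numbered (negatively worded) items contribute 5 minus the rating, and the sum (0 to 40) is multiplied by 2.5 to give a score from 0 to 100. A minimal Python sketch of that rule; the example responses are invented.

```python
def sus_score(responses):
    """Score one completed System Usability Scale (SUS) questionnaire.

    `responses` holds the 10 item ratings in questionnaire order,
    each from 1 (strongly disagree) to 5 (strongly agree).
    """
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS needs exactly 10 ratings between 1 and 5")
    total = 0
    for item, rating in enumerate(responses, start=1):
        if item % 2 == 1:
            total += rating - 1   # odd items are positively worded
        else:
            total += 5 - rating   # even items are negatively worded
    return total * 2.5            # rescale the 0-40 sum to 0-100


# Invented responses from one participant.
print(sus_score([4, 2, 4, 1, 5, 2, 4, 2, 4, 2]))  # 80.0
```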

It is important when conducting a usability study that you, as the researcher, remain neutral: do not offer opinions during the study, and try to avoid assisting the participant when they become frustrated. If the participant decides to give up or asks for assistance, you can either end the scenario or provide minimal assistance. Providing assistance may be worthwhile when the next task scenario depends on the participant completing the current one, or when they are only on step 2 of 10 and you want to assess whether the remaining steps are as hard as this one.

Pros

Good insight into product usability; engagement with representative users; ability to identify issues early in product development.

Cons

Time consuming, difficult to recruit representative participants, difficult to engage participants in the study.

Points to Ponder

Do you have the right type of participants?

Are the tasks the participants are carrying out the most critical?

How are you going to analyse this information?

Have you tested your equipment to know it works correctly and you are capturing the information that is important to you?

Resources

For additional resources on conducting a usability study, click here (15 min read). For an in-depth understanding of usability analysis, its history, why it’s important, and the development of usability evaluation, click here (90 min read).

- User Experience Evaluation

Understand users’ reaction to the product and identify their pleasure and satisfaction when using it.

What and Why

User Experience (UX) evaluation may appear similar to a usability study; the difference is that a usability study focuses on effectiveness and efficiency, whereas a UX evaluation examines the user’s perspective on their interaction with a product over time. This reveals the nuances of the product and the users’ satisfaction with it; in this sense, UX builds on usability.

When conducting a UX evaluation, you are exploring the following questions: How enjoyable is the product to use? Is it helpful? Is it a pleasure to use? Is it engaging? Does it look good? Does it add value to the lives of the users? Answering these questions lets you determine whether the product is something users will want to use or whether the design requires further refinement.

When

As UX evaluation builds on usability, it should be run after you have refined the product through usability testing. Unlike a usability study, a UX evaluation should be run over a set period of time or a set number of interactions with the product. Depending on the product being developed, these evaluations may take place in a lab setting (e.g., a patient monitoring system) or participants may be able to test the product at home (e.g., a blood glucose measuring device). The method used to conduct the UX evaluation should reflect the location where users will be able to assess the product.

How

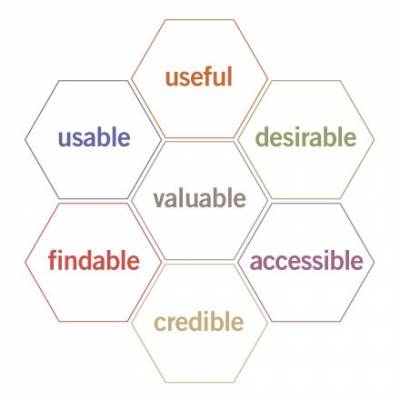

The User Experience Honeycomb is an evaluation tool for UX, see Figure 6. The honeycomb enables researchers to ensure that the fundamentals of UX are addressed, which are: useful, usable, desirable, findable, accessible, credible, and valuable (see UX Honeycomb for more details on each of these). To understand these needs you will need to adopt a longitudinal data collection technique, that is, something you can leave with your participants to capture their impressions of the product after using it. These investigations should be designed so that as many areas of the honeycomb can be considered and evaluated.

Honeycomb model Peter Morville (2004)

Longitudinal data collection techniques are designed to capture users’ thoughts, feelings, and actions when using the product. Typical modes of collecting this data include diary studies, card sorting, interviews, and surveys. Depending on the product, users, and timeframe, each of these modes has its pros and cons. Diary studies allow users to record their own thoughts (either written or as voice recordings) and take photos (if able) of interactions they want to share. Card sorting activities vary, but typically include a set of predefined cards that a user arranges in a prescribed way to provide insights. Interviews and surveys are classic means for researchers to gain insights. Within healthcare, set surveys may be the easiest method to incorporate; however, diary studies, although they take more effort for researchers to prepare and for users to complete, deliver better insights.
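Because a longitudinal study should cover as many facets of the honeycomb as possible, it can help to tag each diary prompt or survey question with the facets it is meant to probe and check for gaps before the study starts. A Python sketch under that assumption; the prompts and their facet tags are hypothetical examples, and deciding which facet a prompt truly probes remains a judgement call for the research team.

```python
# The seven facets of Morville's UX Honeycomb.
HONEYCOMB = {"useful", "usable", "desirable", "findable",
             "accessible", "credible", "valuable"}

# Hypothetical diary-study prompts, each tagged with the facets it is meant to probe.
diary_prompts = {
    "What did you use the device for today, and did it help?": {"useful", "valuable"},
    "Was anything hard to do or hard to find?": {"usable", "findable"},
    "How did you feel about using it in front of others?": {"desirable"},
    "Did you trust the reading it gave you? Why or why not?": {"credible"},
}


def uncovered_facets(prompts):
    """Return the honeycomb facets that no prompt addresses, sorted by name."""
    covered = set().union(*prompts.values())
    unknown = covered - HONEYCOMB
    if unknown:
        raise ValueError(f"Not honeycomb facets: {sorted(unknown)}")
    return sorted(HONEYCOMB - covered)


print(uncovered_facets(diary_prompts))  # ['accessible']
```

Here the check shows that no prompt yet addresses accessibility, so a prompt on that facet would be added before the diaries are handed out.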

In short, a longitudinal study could be structured as follows:

• Use a method that best suits the users and the location where the evaluation can be conducted

• Ensure the method allows users to capture as many aspects of the UX honeycomb as possible

• Provide clear instructions to the users on how you wish them to engage with the method

• Let the users know the duration of the study, and when or how often you want them to evaluate the product

• Send gentle reminders to users to record their thoughts, feelings, and actions using the method you have provided

• At the end of the evaluation, thank the user and provide any agreed incentive

Pros

Identify users’ desire to use the device; highlight key areas that need improvement.

Cons

Some users may give poor-quality feedback; UX is subjective; and over time, users may ‘go through the motions’ when providing information. Studies typically produce a large data set that, depending on the method used, may require transcription, which is time-consuming.

Points to Ponder

Under what conditions will this product be evaluated?

Does my method allow me to fully understand all facets of UX?

How long does the evaluation need to run for?

How many users do you need to understand the UX?

Resources

For examples of how user experience has shaped design, click here (25 min read).

- ‘In the Wild’ Testing

‘In the wild’ testing, also referred to as naturalistic observation, goes beyond user experience testing by evaluating the product in the context it was designed for. During this testing, users are observed interacting with the product as they see fit, which can reveal issues the development team did not foresee.

What and Why

‘In the wild’ testing involves carrying out a usability study and a user experience evaluation while the intended users use the product in the context it was designed for. It is important that this in-field testing is carried out before moving to market, as it allows the regulatory design requirements to be evaluated and any unforeseen issues to be captured and their risk determined. Such issues may include the product not being used as intended or being used in a manner it was not designed for. In-field testing also identifies unknown constraints and shows how the introduction of the product will modify existing workflows and task distribution. The issues highlighted in “Examples of the need for Human Factors” are ones that in-depth ‘in the wild’ studies should have been able to identify, allowing design changes that improve the product’s ability to perform its task as well as user satisfaction and safety.

When

‘In the wild’ testing is the last formal test carried out by researchers before moving to mass production and can provide a summative evaluation of the product. During this testing, challenges may become apparent that could not be picked up in usability and user experience evaluations conducted outside the intended context. Therefore, repeating these studies in the environment the product was developed for is essential. The duration of testing also needs to be considered, so that enough data is captured for a realistic evaluation of use. For example, a new dialysis machine may only be used every couple of days, so the time frame may depend on the user performing dialysis a set number of times. However, if the product helps take common patient bedside readings, such as temperature, heart rate, and oxygen saturation, then an evaluation period of a week may be sufficient.
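When the study length is driven by a number of uses rather than by calendar time, the duration follows from simple arithmetic: the first use on day 1, then one use every fixed interval. The Python sketch below assumes a regular usage interval and an optional buffer for missed sessions; the function name `study_days`, the 12-session target, and the 4-day buffer are hypothetical values chosen for illustration.

```python
def study_days(sessions_needed, days_between_uses, buffer_days=0):
    """Estimate the calendar length of an 'in the wild' study, in days.

    Assumes the first use falls on day 1 and each later use follows after
    a fixed interval; `buffer_days` allows for missed or rescheduled sessions.
    """
    if sessions_needed < 1 or days_between_uses <= 0 or buffer_days < 0:
        raise ValueError("need >= 1 session, a positive interval, and a non-negative buffer")
    return 1 + (sessions_needed - 1) * days_between_uses + buffer_days


# Hypothetical dialysis study: 12 observed sessions, one every 2 days.
print(study_days(12, 2))                 # 23
print(study_days(12, 2, buffer_days=4))  # 27
```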

How

‘In the wild’ testing involves repeating usability and user experience evaluations, the main differences being the location and the users. To ensure that all possible outcomes are understood, the product should be evaluated by as many participants as possible at varying locations. For example, a device to be used by neurosurgeons should ideally be tested at a number of hospitals by both senior and junior surgeons. Similarly, a product developed for in-home use should be evaluated in a variety of households, capturing as wide a range of users as possible.
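To check that recruitment actually spans the intended locations and user groups, planned participants can be cross-tabulated by site and role. A Python sketch, assuming each participant record carries a `site` and a `role` and that every site/role cell needs a minimum number of participants; the `coverage_gaps` helper, the threshold of two, and the hospital names are hypothetical.

```python
from collections import Counter
from itertools import product


def coverage_gaps(participants, sites, roles, minimum=2):
    """Return (site, role, count) for every site/role cell below `minimum`."""
    counts = Counter((p["site"], p["role"]) for p in participants)
    return [(site, role, counts[(site, role)])
            for site, role in product(sites, roles)
            if counts[(site, role)] < minimum]


# Hypothetical recruitment plan for a neurosurgical device.
participants = [
    {"id": "S01", "site": "Hospital A", "role": "senior surgeon"},
    {"id": "S02", "site": "Hospital A", "role": "senior surgeon"},
    {"id": "S03", "site": "Hospital A", "role": "junior surgeon"},
    {"id": "S04", "site": "Hospital A", "role": "junior surgeon"},
    {"id": "S05", "site": "Hospital B", "role": "senior surgeon"},
    {"id": "S06", "site": "Hospital B", "role": "junior surgeon"},
    {"id": "S07", "site": "Hospital B", "role": "junior surgeon"},
]

print(coverage_gaps(participants, ["Hospital A", "Hospital B"],
                    ["senior surgeon", "junior surgeon"]))
# [('Hospital B', 'senior surgeon', 1)]
```

Cells with no participants at all are reported with a count of 0, so an entire site missing from the plan is also flagged.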

Pros

Understand how the product works in its true environment; identify unknown constraints; and see exactly how introducing the product modifies existing workflows and task distribution.

Cons

Data collection can be difficult to manage and, depending on the product, evaluation studies may be difficult to conduct. The studies also generate a large data set that needs to be analysed and assessed.

Points to Ponder

Does the product impede existing practices?

How many locations and users are required to properly evaluate the product?

Is the product being used as intended?

Resources

For additional information on the pros and cons of ‘in the wild’ testing, read the section on Naturalistic Observation here (2 min read). To better understand the importance of ‘in the wild’ testing, click here (3 min read).
