Human-computer interaction (HCI) focuses on the ways humans interact with computers and other technology. This includes a variety of tasks, such as recognising handwriting and gestures.

There are a variety of ways in which humans interact with modern technology. In addition to traditional input devices such as keyboards, we often want to input data or control devices through our natural means of communication, such as handwriting, speech and gestures. Traditional input devices were designed to communicate with machines and therefore produce a structured data stream. These human forms of communication, by contrast, produce highly complex multimodal data streams that are much harder for a machine to interpret. Some examples of such data streams are:

- The path of a finger or pen on a screen

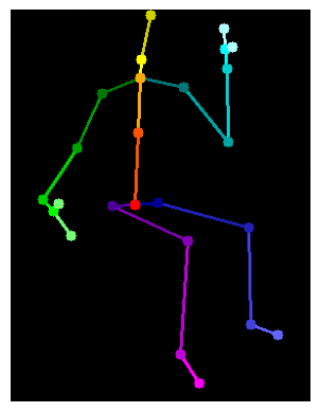

- Landmark data: a collection of points representing the positions in space of the major joints of, for example, the hand or the whole human body

- Accelerometer data

In our research we combine tools from rough path theory and modern machine learning to develop robust methods for making sense of these data streams, with the aim of improving the ways we interact with the machines around us.
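The main tool from rough path theory used in this kind of work is the path signature, a sequence of iterated integrals that summarises a stream. As a minimal sketch, assuming the stream is treated as a piecewise-linear path and truncating at level 2, the signature can be built segment by segment with Chen's identity: a linear segment with increment Δ has signature (Δ, Δ⊗Δ/2), and appending a segment updates level 2 by the tensor product of the current level 1 with the new increment. The function name `signature_level2` is hypothetical; dedicated packages such as iisignature or esig compute higher levels efficiently.

```python
import numpy as np


def signature_level2(path):
    """Depth-2 truncated signature of a piecewise-linear path (hypothetical helper).

    path: array-like of shape (n_points, d).
    Returns (level1, level2) with shapes (d,) and (d, d).
    """
    path = np.asarray(path, dtype=float)
    d = path.shape[1]
    level1 = np.zeros(d)
    level2 = np.zeros((d, d))
    for delta in np.diff(path, axis=0):
        # Chen's identity: concatenate the path so far with one linear segment,
        # whose own signature is (delta, delta (x) delta / 2).
        # Level 2 must use the level 1 from *before* this segment is added.
        level2 += np.outer(level1, delta) + np.outer(delta, delta) / 2
        level1 += delta
    return level1, level2


# Example: an L-shaped stroke, right then up.
level1, level2 = signature_level2([[0, 0], [1, 0], [1, 1]])
# Antisymmetric part of level 2: the signed (Levy) area.
print((level2[0, 1] - level2[1, 0]) / 2)  # 0.5
```

Level 1 is just the total increment, but the antisymmetric part of level 2 is the signed area between the path and its chord, which encodes the order in which the movements happened. Subdividing a segment or inserting repeated points leaves the result unchanged, which is one reason signatures are robust to irregular sampling.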

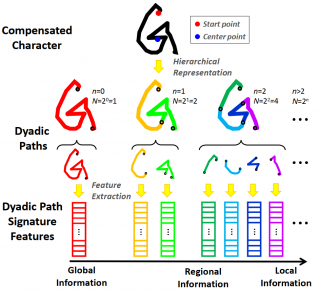

Handwriting

An example of an application of the path signature in combination with deep learning to recognise handwriting is the gPen smartphone app, developed by South China University of Technology, which converts Chinese characters drawn on the phone screen into printed text. To achieve this, it uses a 3-dimensional input stream consisting of the x and y coordinates of the pen or finger on the screen and the pen on/off state. The app is widely used in China and has recognised billions of characters. [1]
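A 3-dimensional stream of this kind can be assembled from raw touch events in several ways. The following is a minimal sketch under our own assumptions, not the gPen implementation: each pen-down stroke is a list of (x, y) points, the third channel is 1.0 while the pen touches the screen and 0.0 while it is lifted, and the jump between strokes is recorded as an in-air move. The function name `strokes_to_stream` is hypothetical.

```python
import numpy as np


def strokes_to_stream(strokes):
    """Concatenate pen strokes into one (x, y, pen) stream (hypothetical helper).

    strokes: list of sequences of (x, y) points, one sequence per pen-down stroke.
    Returns an array of shape (n, 3); column 2 is 1.0 for ink, 0.0 for pen lifted.
    """
    rows = []
    for i, stroke in enumerate(strokes):
        stroke = np.asarray(stroke, dtype=float)
        if i > 0:
            # The pen arrives in the air at the start of this stroke...
            rows.append([stroke[0, 0], stroke[0, 1], 0.0])
        # ...touches down and draws every point of the stroke...
        rows.extend([x, y, 1.0] for x, y in stroke)
        if i < len(strokes) - 1:
            # ...and lifts at the end, before travelling to the next stroke.
            rows.append([stroke[-1, 0], stroke[-1, 1], 0.0])
    return np.array(rows)


# Example: two horizontal strokes, one above the other.
stream = strokes_to_stream([[(0, 0), (1, 0)], [(0, 1), (1, 1)]])
print(stream.shape)  # (6, 3)
```

Keeping the pen channel means the in-air moves between strokes are part of the path but remain distinguishable from drawn ink, so the same set of strokes written in a different order yields a different stream.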

Gesture & Action recognition

Gestures, and movement in general, provide a very natural way to interact with a machine, with a wide range of use cases: from simple commands, such as skipping a song with a swipe in the air, to very complex interactions, such as gaming without any form of controller, as with the Microsoft Kinect. To allow a machine to perceive and understand human movement, there are different data types one can make use of. One possibility is landmark data, a collection of points describing the spatial positions of the major joints of the human body or of a part of it, such as a single hand. This kind of data can be obtained using specialised hardware such as the Microsoft Kinect, or using modern deep learning techniques for pose estimation, which estimate the joint positions in either 2D or 3D from standard RGB video. Another type of data that captures human movement and is easy to collect is accelerometer data, such as that recorded by modern fitness-tracking devices. These devices are small and lightweight and can easily be worn without obstructing the user.
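Before a signature or another sequence model can be applied, the landmark frames have to be arranged as a path. A minimal sketch, assuming the pose estimator returns an array of shape (T, J, D) per clip (T frames, J joints, D = 2 or 3 coordinates): each frame is flattened into a single point, and a time channel is prepended. This time augmentation is a common choice in signature-based methods, because the signature on its own is invariant to reparametrisation and would otherwise ignore the speed of a movement. The function name `landmarks_to_path` is hypothetical.

```python
import numpy as np


def landmarks_to_path(frames, timestamps=None):
    """Flatten a landmark sequence into a time-augmented path (hypothetical helper).

    frames: array-like of shape (T, J, D): T frames, J joints, D coordinates each.
    timestamps: optional array-like of shape (T,); defaults to frame indices 0..T-1.
    Returns an array of shape (T, 1 + J * D) whose first column is time.
    """
    frames = np.asarray(frames, dtype=float)
    T = frames.shape[0]
    if timestamps is None:
        timestamps = np.arange(T, dtype=float)
    # One row per frame, with all joint coordinates side by side.
    flat = frames.reshape(T, -1)
    return np.column_stack([np.asarray(timestamps, dtype=float), flat])


# Example: 2 frames of 2 joints in 3D.
clip = np.arange(12).reshape(2, 2, 3)
print(landmarks_to_path(clip).shape)  # (2, 7)
```

Accelerometer data fits the same helper by treating the sensor as a single "joint" (J = 1, D = 3) and passing the sensor's own sample times as `timestamps`.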
