Action and Perception Lab

In the Action and Perception Lab we examine a variety of questions about action and perception, and often the link between the two. We use a range of methods, including psychophysics, functional magnetic resonance imaging, electroencephalography, magnetoencephalography and computational modelling. Below are some examples of the questions we ask.

The online influence of action on perception. Acting effectively on the world around us requires accurately predicting the consequences of our movements. We select actions based on their predicted outcomes, and we use these predictions to generate rapid corrective movements when sensory input deviates from what we expect. A range of evidence shows that these predictions also change the percept itself. Using psychophysical and neural techniques, we examine how these predictions shape our percepts during action. In particular, we ask how they help render percepts veridical despite considerable internal and external sensory noise and the need to generate percepts rapidly.

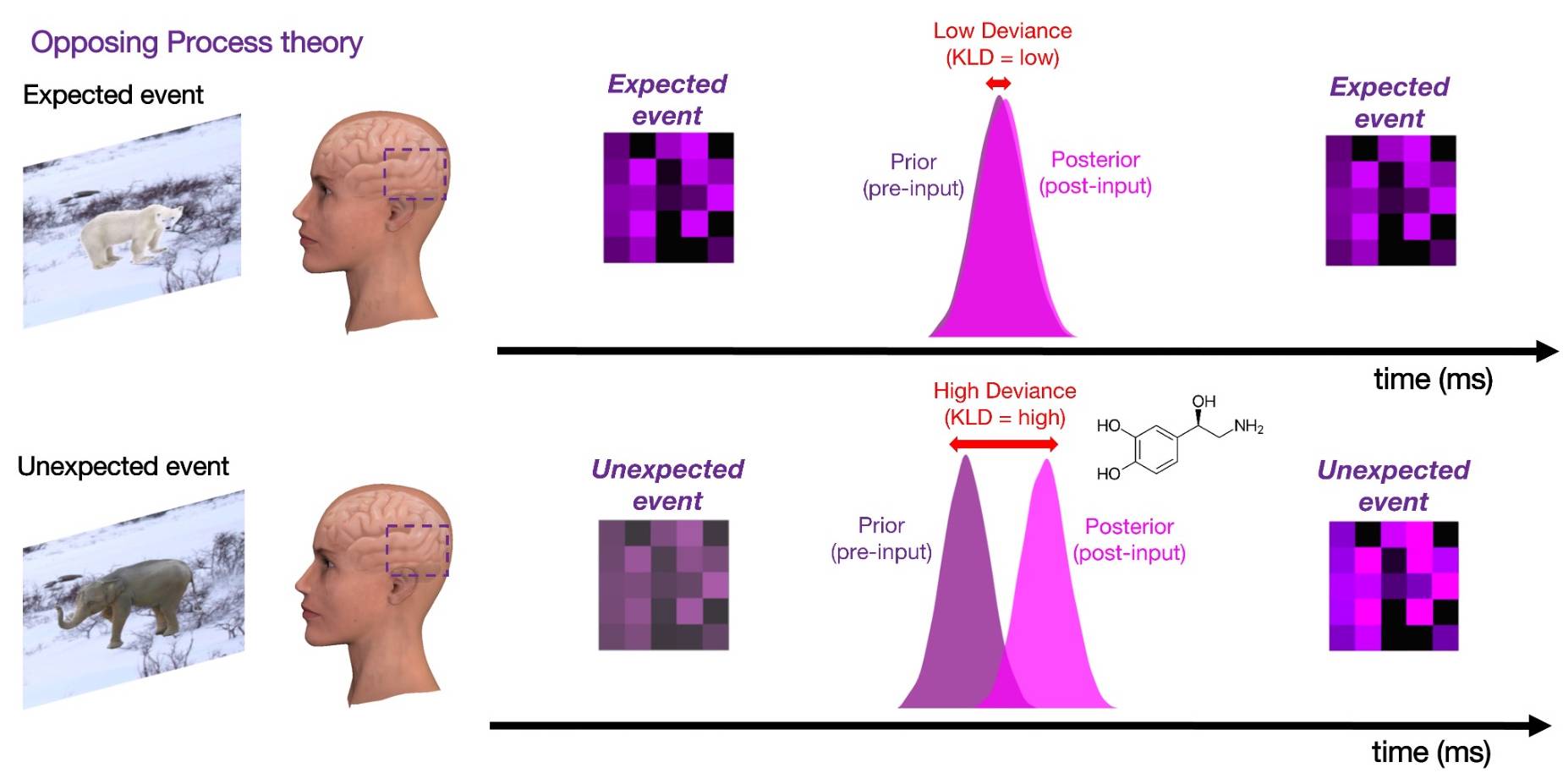

Influences of expectation on perception. Building on the work described above, we have also been considering how to reconcile current theories from the action domain with those from other domains of sensory cognition about how expectation influences perception. Specifically, we have recently proposed a new theory of how our expectations might render our perceptual experiences both veridical and informative.

This work considers the broad interdependence between learning and perception: how we use learnt knowledge to shape perception so that it is useful for future learning. We are using a range of computational and neuroimaging (MEG and 7T fMRI) methods to answer these questions. The project is funded by an ERC Consolidator grant that runs until 2027.

Oscillatory processing. We are testing a new account of how the oscillatory rhythm of sensory sampling is determined. Our sensory systems are bombarded with a continuously changing stream of noisy information, and given their limited capacity, we cannot process every sight and sound perfectly. Organisms therefore face a monumental challenge in determining what to sample from this dynamic signal and in generating useful percepts from these sensory inputs. Intriguingly, different perceptual disciplines, namely vision (action and object perception) and audition (speech perception), have proposed contrasting accounts of how we achieve this feat. We believe these contrasting theories emerged because each discipline focused on a different adaptive function of perception, even though these functions in fact apply equally across domains. We therefore aim to establish how we actually sample our environment by cross-pollinating these ideas across disciplines and testing a novel account that arises from their direct comparison. This project is funded by a Leverhulme Trust project grant that runs until 2026.
