Astrophysics Group, Department of Physics and Astronomy

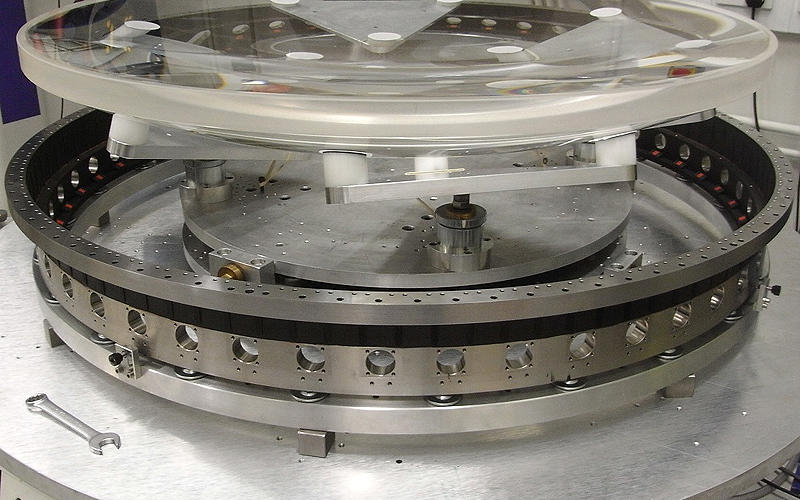

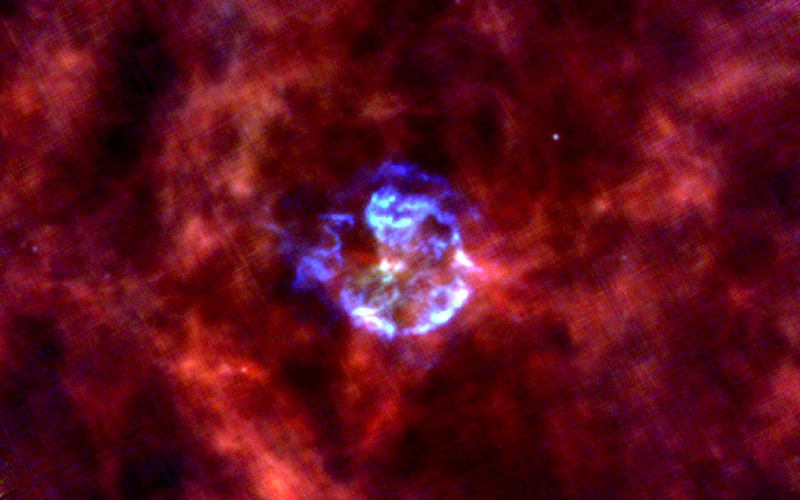

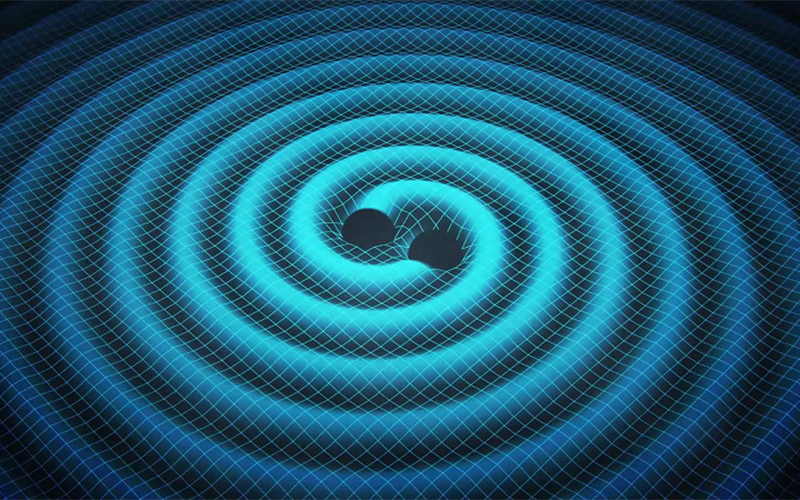

Our group members play leadership roles in many international projects, spanning a timeline from the present to around 2030. These include the Dark Energy Survey, DESI, 4MOST, Ariel, Euclid, JWST, LSST, Subaru PFS, ALMA, JUICE, SKA, LISA, the Hubble Space Telescope, Cassini, LOFAR, Planck, Herschel, e-MERLIN, the Kilo-Degree Survey and WEAVE.

Our group is part of the Physics and Astronomy Department at UCL. An overview of the group's research can be found in the most recent Departmental Annual Review.

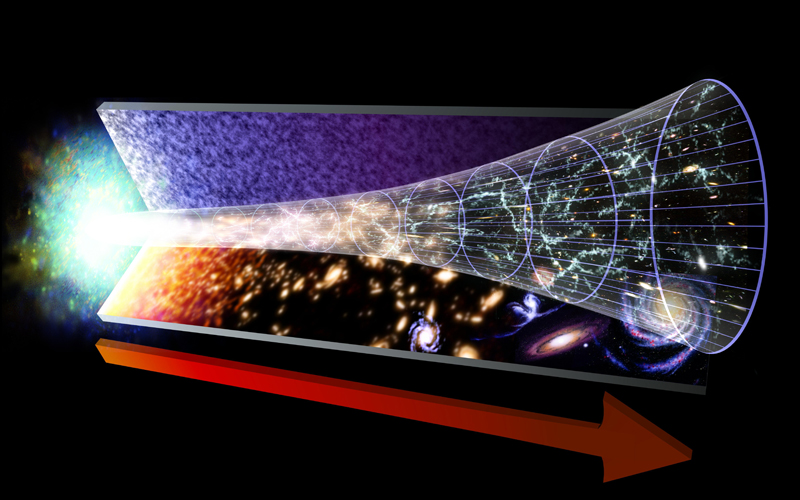

The group's five main research sub-groups are listed below. The group also supports a number of centres and hubs: the Cosmoparticle Initiative (CPI), the Centre for Space Exochemistry Data (CSED), the Centre for Planetary Sciences (CPS) and the Centre for Data Intensive Science and Industry (CDT DIS).

The Department of Physics and Astronomy has achieved a Juno Champion Award from the Institute of Physics (IOP). Project Juno recognises and celebrates good employment practices for gender equality and an equitable working culture. UCL holds a Silver Athena SWAN award from the Equality Challenge Unit (ECU), and a Bronze award under the ECU's new Race Equality Charter for higher education.

UCL's Equality, Diversity and Inclusion strategy aims to foster a positive cultural climate in which all staff and students can flourish, and where no-one feels compelled to conceal or play down elements of their identity for fear of stigma. UCL is a place where people can be authentic, and where their unique perspectives, experiences and skills are seen as valuable assets to the institution.