- Introduction

- 1. Introduction to Quantitative Analysis

- 2. Descriptive Statistics

- 3. T-test for Difference in Means and Hypothesis Testing

- 4. Bivariate linear regression models

- 5. Multiple linear regression models

- 6. Assumptions and Violations of Assumptions

- 7. Interactions

- 8. Panel Data, Time-Series Cross-Section Models

- 9. Binary models: Logit

- 10. Frequently Asked Questions

- 11. Optional Material

- 12. Datasets

- 13. R Resources

- 14. References

- Published with GitBook

5. Multiple linear regression models

5.1 Seminar

Setting a Working Directory

Before you begin, make sure to set your working directory to a folder where your course related files such as R scripts and datasets are kept. We recommend that you create a PUBLG100 folder for all your work. Create this folder on N: drive if you're using a UCL computer or somewhere on your local disk if you're using a personal laptop.

Once the folder is created, use the setwd() function in R to set your working directory.

| Recommended Folder Location | R Function | |

|---|---|---|

| UCL Computers | N: Drive | setwd("N:/PUBLG100") |

| Personal Laptop (Windows) | C: Drive | setwd("C:/PUBLG100") |

| Personal Laptop (Mac) | Home Folder | setwd("~/PUBLG100") |

After you've set the working directory, verify it by calling the getwd() function.

getwd()

Now download the R script for this seminar from the "Download .R Script" button above, and save it to your PUBLG100 folder.

library(foreign)

library(Zelig)

library(texreg)

library(dplyr)

rm(list = ls())

Loading, Understanding and Cleaning our Data

Today, we load the full standard (cross-sectional) dataset from the Quality of Government Institute. This is a great data source for comparativist political science research. The codebook is available from their main website. You can also find time-series and cross-section data sets on this page.

Download the dataset from the links below:

Download 'Quality of Government' Dataset

# load dataset in Stata format

world_data <- read.dta("qog_std_cs_jan15.dta")

# check the dimensions of the dataset

dim(world_data)

[1] 193 2037

Dplyr select()

The dataset contains a huge number of variables. We will therefore select a subset of variables that we want to work with. We are interested in political stability. Specifically, we want to find out what predicts the level of political stability. Therefore, political_stability is our dependent variable (also called response variable). We will also select an ID variable that identifies each row (observation) in the dataset uniquely: cname which is the name of the country. Potential predictors (independent variables) are:

lp_lat_abstis the distance to the equator which we rename intolatitudedr_ingis an index for the level of globalization which we rename toglobalizationti_cpiis Transparency International's Corruptions Perceptions Index, renamed toinst_quality(larger values mean better quality institutions, i.e. less corruption)

Our dependent variable:

wbgi_psewhich we rename intopolitical_stability(larger values mean more stability)

One approach of selecting a subset of variables we're interested in would be to use either the subset() function or the square bracket [ ] operator. But let's see if there's an easier way to do that.

We saw the rename() function from dplyr a couple of weeks ago which we could use for renaming variables. But instead, let's see if we can rename the variables and select the ones we want with a single function instead. The dplyr select() allows us to do just that.

world_data <- select(world_data,

country = cname,

political_stability = wbgi_pse,

latitude = lp_lat_abst,

globalization = dr_ig,

inst_quality = ti_cpi)

Now let's make sure we've got everything we need

head(world_data)

country political_stability latitude globalization

1 Afghanistan -2.5498192 0.3666667 31.46042

2 Albania -0.1913142 0.4555556 58.32265

3 Algeria -1.2624909 0.3111111 52.37114

4 Andorra 1.3064846 0.4700000 NA

5 Angola -0.2163249 0.1366667 44.73296

6 Antigua and Barbuda 0.9319394 0.1892222 48.15911

inst_quality

1 1.4

2 3.3

3 2.9

4 NA

5 1.9

6 NA

The function summary() lets you summarize data sets. We will look at the dataset now. When the dataset is small in the sense that you have few variables (columns) then this is a very good way to get a good overview. It gives you an idea about the level of measurement of the variables and the scale. country, e.g., is a character variable as opposed to a number. Countries do not have any order, so the level of measurement is categorical. If you think about the next variable, political stability, and how one could measure it you know there is an order implicit in the measurement: more or less stability. From there, what you need to know is whether the more or less is ordinal or interval scaled. Checking political_stability you see a range from roughly -3 to 1.5. The variable is numerical and has decimal places. This tells you that the variable is at least interval scaled. You will not see ordinally scaled variables with decimal places. Using these heuristics look at the other variables.

summary(world_data)

country political_stability latitude globalization

Length:193 Min. :-3.10637 Min. :0.0000 Min. :24.35

Class :character 1st Qu.:-0.72686 1st Qu.:0.1444 1st Qu.:45.22

Mode :character Median :-0.01900 Median :0.2444 Median :54.99

Mean :-0.06079 Mean :0.2865 Mean :57.15

3rd Qu.: 0.78486 3rd Qu.:0.4444 3rd Qu.:68.34

Max. : 1.57240 Max. :0.7222 Max. :92.30

NA's :12 NA's :12

inst_quality

Min. :1.010

1st Qu.:2.400

Median :3.300

Mean :3.988

3rd Qu.:5.100

Max. :9.300

NA's :12

The variables latitude, globalization and inst_quality have 12 missing values each marked as NA. Missing values coule cause trouble because algebraic operations including an NA will produce NA as a result (e.g.: 1 + NA = NA). We will drop these missing values from our data set now using the filter function.

world_data <- filter(world_data,

!is.na(latitude),

!is.na(globalization),

!is.na(inst_quality))

There are numerous ways to drop observations with missing values. The method shown above is one of the safest ways to do that since you're only dropping observations where the 3 variables we care about (latitude, globalization and inst_quality) are missing.

summary(world_data)

country political_stability latitude globalization

Length:170 Min. :-2.67338 Min. :0.0000 Min. :25.46

Class :character 1st Qu.:-0.79223 1st Qu.:0.1386 1st Qu.:46.05

Mode :character Median :-0.03174 Median :0.2500 Median :55.87

Mean :-0.12018 Mean :0.2865 Mean :57.93

3rd Qu.: 0.66968 3rd Qu.:0.4444 3rd Qu.:69.02

Max. : 1.48047 Max. :0.7222 Max. :92.30

inst_quality

Min. :1.400

1st Qu.:2.500

Median :3.300

Mean :4.050

3rd Qu.:5.175

Max. :9.300

Let's look at the output of summary(world_data) again and check the range of the variable latitude. It is between 0 and 1. The codebook clarifies that the latitude of a country's capital has been divided by 90 to get a variable that ranges from 0 to 1. This would make interpretation difficult. When interpreting the effect of such a variable a unit change (a change of 1) covers the entire range or put differently, it is a change from a country at the equator to a country at one of the poles.

We therefore multiply by 90 again. This will turn the units of the latitude variable into degrees again which makes interpretation easier.

# transform latitude variable

world_data$latitude <- world_data$latitude * 90

Estimating a Bivariate Regression

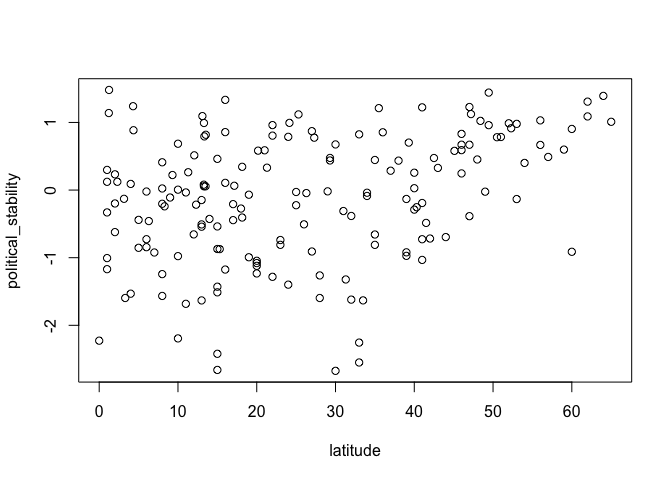

Is there a correlation between the distance of a country to the equator and the level of political stability? Both political stability (dependent variable) and distance to the equator (independent variable) are continuous. Therefore, we will get an idea about the relationship using a scatter plot. Looking at the cloud of points suggests that there might be a positive relationship. Positive means, that increasing our independent variable latitude is related to an increase in the dependent variable political_stability (the further from the equator, the more stable).

plot(political_stability ~ latitude, data = world_data)

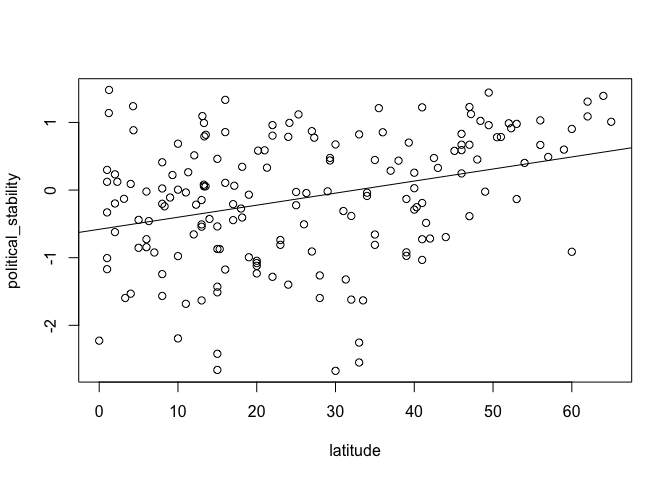

We can fit a line of best fit through the points. To do this we must estimate the bivariate model with the lm() function and then plot the line using the abline() function.

latitude_model <- lm(political_stability ~ latitude, data = world_data)

# add the line

plot(political_stability ~ latitude, data = world_data)

abline(latitude_model)

# regression output

screenreg(latitude_model)

=======================

Model 1

-----------------------

(Intercept) -0.58 ***

(0.12)

latitude 0.02 ***

(0.00)

-----------------------

R^2 0.11

Adj. R^2 0.10

Num. obs. 170

RMSE 0.89

=======================

*** p < 0.001, ** p < 0.01, * p < 0.05

Multivariate Regression

Distance to the equator may not be the best predictor for political stability. This is because there is no causal link between the two. We should think of other variables to include in our model. We will include the index of globalization (higher values mean more integration with the rest of the world) and the quality of institutions. For both we can come up with a causal story for their effect on political stability. Including the two new predictors leads to substantial changes. First, we now explain 49% of the variance of our dependent variable instead of just 11%. Second, the effect of the distance to the equator is no longer significant. Better quality institutions correspond to more political stability. Quality of institutions ranges from 1 to 10. You can estimae the effect of increasing the quality of institutions by 10 percentage points (e.g. from 4 to 5) by dividing 0.34 (the estimate of inst_quality) by the range of the dependent variable. This will give you the change in percentage points of the dependent variable for a 10 percentage points change of inst_quality.

# model with more explanatory variables

inst_model <- lm(political_stability ~ latitude + globalization + inst_quality,

data = world_data)

screenreg(list(latitude_model, inst_model))

=====================================

Model 1 Model 2

-------------------------------------

(Intercept) -0.58 *** -1.25 ***

(0.12) (0.20)

latitude 0.02 *** 0.00

(0.00) (0.00)

globalization -0.00

(0.01)

inst_quality 0.34 ***

(0.04)

-------------------------------------

R^2 0.11 0.50

Adj. R^2 0.10 0.49

Num. obs. 170 170

RMSE 0.89 0.67

=====================================

*** p < 0.001, ** p < 0.01, * p < 0.05

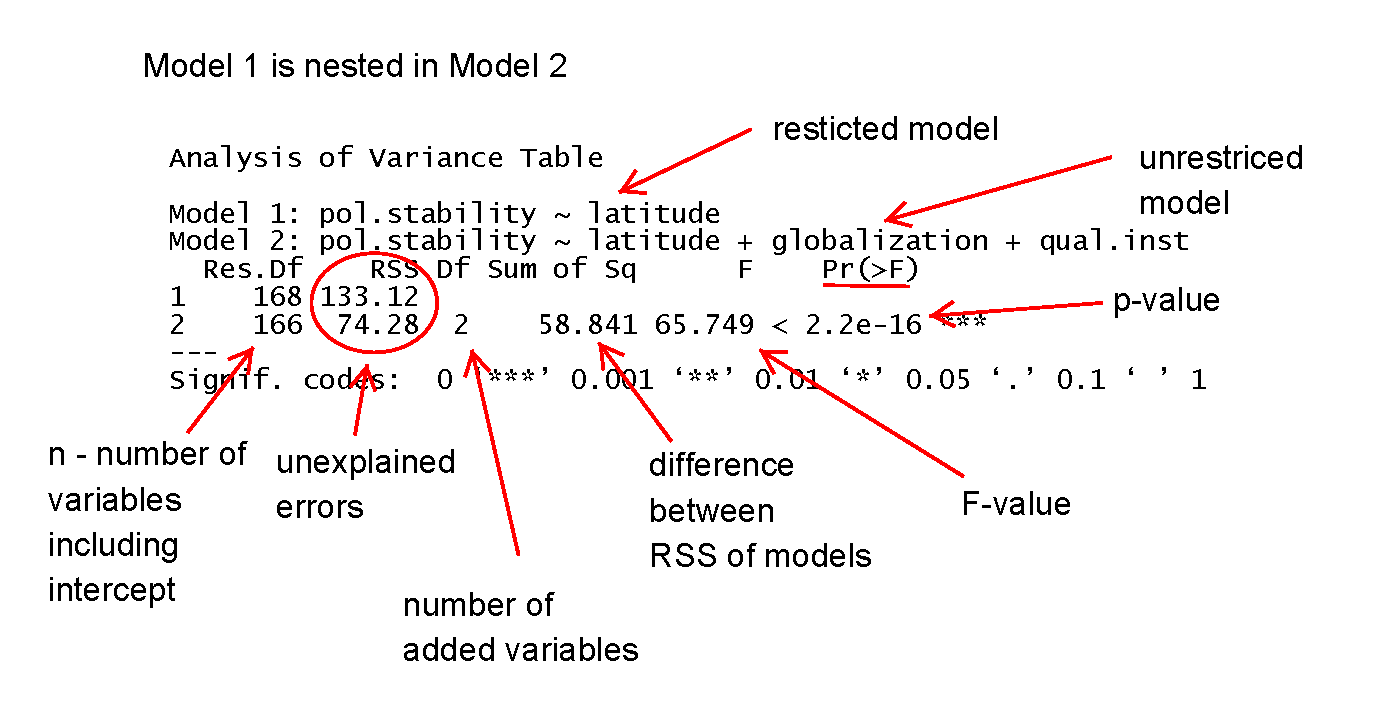

Joint Significance Test (F-statistic)

Whenever you add variables to your model, you will explain more variance in the dependent variable. That means, using your data, your model will better predict outcomes. We would like to know whether the difference (the added explanatory power) is statistically significant. The null hypothesis is that the added explanatory power is zero and the p-value gives us the probability of observing such a difference as the one we actually computed assuming that null hypothesis (no difference) is true.

The F-test is a joint hypothesis test that lets us compute that p-value. Two conditions must be fulfilled to run an F-test:

| Conditions for F-test model comparison |

|---|

Both models must be estimated from the same sample! If your added variables contain lots of missing values and therefore your n (number of observations) are reduced substantially, you are not estimating from the same sample. |

| The models must be nested. That means, the model with more variables must contain all of the variables that are also in the model with fewer variables. |

We specify two models: a restricted model and an unrestricted model. The restricted model is the one with fewer variables. The unrestricted model is the one including the extra variables. We say restricted model because we are "restricting" it to NOT depend on the extra variables. Once we estimated those two models we compare the residual sum of squares (RSS). The RSS is the sum over the squared deviations from the regression line and that is the unexplained error. The restricted model (fewer variables) is always expected to have a larger RSS than the unrestricted model. Notice that this is same as saying: the restricted model (fewer variables) has less explanatory power. We test whether the reduction in the RSS is statistically significant using a distribution called "F distribution". If it is, the added variables are jointly (but not necessarily individually) significant.

anova(latitude_model, inst_model)

Analysis of Variance Table

Model 1: political_stability ~ latitude

Model 2: political_stability ~ latitude + globalization + inst_quality

Res.Df RSS Df Sum of Sq F Pr(>F)

1 168 133.12

2 166 74.28 2 58.841 65.749 < 2.2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Predicting outcome conditional on institutional quality

To predict and to show our uncertainty, we use zelig() again. We run the same regression as we did in second model. Therefore, our regression output of z.out will be the same as inst_model. The advantage of zelig() is what comes next -- predicting outcomes and presenting the uncertainty attached to our predictions.

We proceed in four steps.

- We estimate the model.

We set the covariates.

- you do not have to set all covariates. E.g.: we are only setting values for the quality of institutions but not for

latitudeorglobalization. Depending on the level of measurement, zelig will automatically set covariate values for the variables where you did not specify values yourself. Continuous variables will be set to their means, ordinal ones to the median, and categorical ones to the mode.

- you do not have to set all covariates. E.g.: we are only setting values for the quality of institutions but not for

We simulate.

- We plot the results.

# multivariate regression model

z.out <- zelig(political_stability ~ latitude + globalization + inst_quality,

data = world_data,

model = "ls")

How to cite this model in Zelig:

R Core Team. 2007.

ls: Least Squares Regression for Continuous Dependent Variables

in Christine Choirat, James Honaker, Kosuke Imai, Gary King, and Olivia Lau,

"Zelig: Everyone's Statistical Software," http://zeligproject.org/

# setting covariates

x.out <- setx(z.out, inst_quality = seq(0, 10, 1))

# simulation

s.out <- sim(z.out, x = x.out)

# plot results

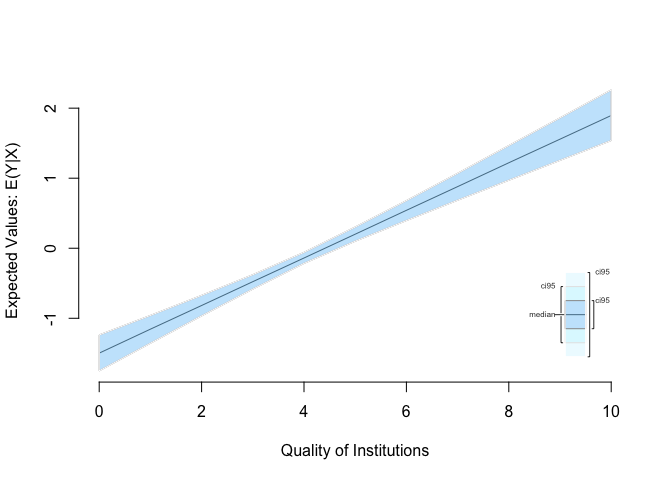

ci.plot(s.out, ci = 95, xlab = "Quality of Institutions")

Sometimes, you want to compare two groups. We could be interested in comparing countries with low institutional quality to countries with high institutional quality with respect to the effect on political stability. You can easily do this, using zelig(). We construct a hypothetical case. Specifically, what happens if you move from the 25th percentile of institutional quality to the 75th percentile of institutional quality?

# 25th percentile of quality of institutions

x.out.low <- setx(z.out, inst_quality = quantile(world_data$inst_quality, 0.25))

# 75th percentile of quality of institutions

x.out.high <- setx(z.out, inst_quality = quantile(world_data$inst_quality, 0.75))

# simulation

s.out <- sim(z.out, x = x.out.low, x1 = x.out.high)

# summary output

summary(s.out)

sim x :

-----

ev

mean sd 50% 2.5% 97.5%

1 -0.6438257 0.07785633 -0.6432758 -0.7978783 -0.4897753

pv

mean sd 50% 2.5% 97.5%

[1,] -0.6478814 0.6757176 -0.632777 -1.985262 0.6475358

sim x1 :

-----

ev

mean sd 50% 2.5% 97.5%

1 0.2621608 0.06631052 0.2669434 0.1364416 0.3965021

pv

mean sd 50% 2.5% 97.5%

[1,] 0.2673095 0.6772118 0.2562177 -0.9940522 1.604387

fd

mean sd 50% 2.5% 97.5%

1 0.9059866 0.10136 0.9094082 0.706925 1.106945

When we summarise results from simulations done by zelig() it always shows predicted and expected values. Lets get to the interpretation of our results using both plot and the numbers from the summary. In the sim() function we specified that x is the low quality of institutions case and x1 is the high quality of institutions case. Our dependent variable is political stability. We expect a mean level of political stability of -0.644 for the low quality of institutions case. You get this number from the output of summary(s.out) under the ev section which stands for expected values. Similarly, we expect a mean level of political stability for the high institutional quality case of 0.262. When you want to know whether the difference between the two groups (low quality institutions & high quality institutions) is significantly different from zero (statistically significant) you look at the first difference. The mean first difference is 0.906. Looking at the 95% confidence level the difference is between 0.707 and 1.107. This interval does not include zero, you can reject the null hypothesis that the difference is zero.

Additional Resources

Exercises

Download the

corruption.csvfrom the link below and read it in R.Inspect the data. Spending some time here will help you for the next tasks. Knowing and understanding your data will help you when running and interpreting models and you are less prone to making mistakes.

Run a regression on

gdp. Useti.cpi(corruption perceptions index, larger values mean less corruption) andregionas independent variables. Print the model results to a word file called model1.doc. Also, print your output to the screen.- Spoiler (this will make sense when looking at the regression output): Region is a categorical variable and R recognizes it as a factor variable. The variable has four categories corresponding to the four regions. When you run the model, the regions are included as binary variables (also sometimes called dummies) that take the values 0 or 1. For example,

regionAmericasis 1 when the observation (the country) is from the Americas. Only three regions are included in the model, while the fourth is what we call the baseline or reference category. It is included in the intercept. Interpreting the intercept would mean, looking at a hypothetical African country whereti.cpiis zero. If the estimate for the region dummy Europe is significant then that means that there is a difference in the dependent variable between European countries and the baseline category Africa.

- Spoiler (this will make sense when looking at the regression output): Region is a categorical variable and R recognizes it as a factor variable. The variable has four categories corresponding to the four regions. When you run the model, the regions are included as binary variables (also sometimes called dummies) that take the values 0 or 1. For example,

Does the inclusion of region improve model fit compared to a model without regions but with the corruption perceptions index? Hint: You need to compare two models.

- Predict

gdpby varyingti_cpifrom lowest to highest using the model that includesregion. Plot your results. - Is there a difference between Europe and the Americas? Hint: is the difference between the predicted values for the two groups significantly different from zero?