Mastr captcha

Funa Ye + Kate Rogers

click ↑

The CAPTCHA test determines whether a user is human by generating images that are difficult for non-human intelligence, i.e. ‘bots’ or computer programs, to determine. We assume we are different from robots in our ability to love, empathise and hold morals, but are we only known by the system for being better at recognising traffic lights, school buses and fire hydrants in pictures? The number of machine-generated images is gradually increasing on the web, does it follow that humans may not be able to understand the logic of a robot's images?

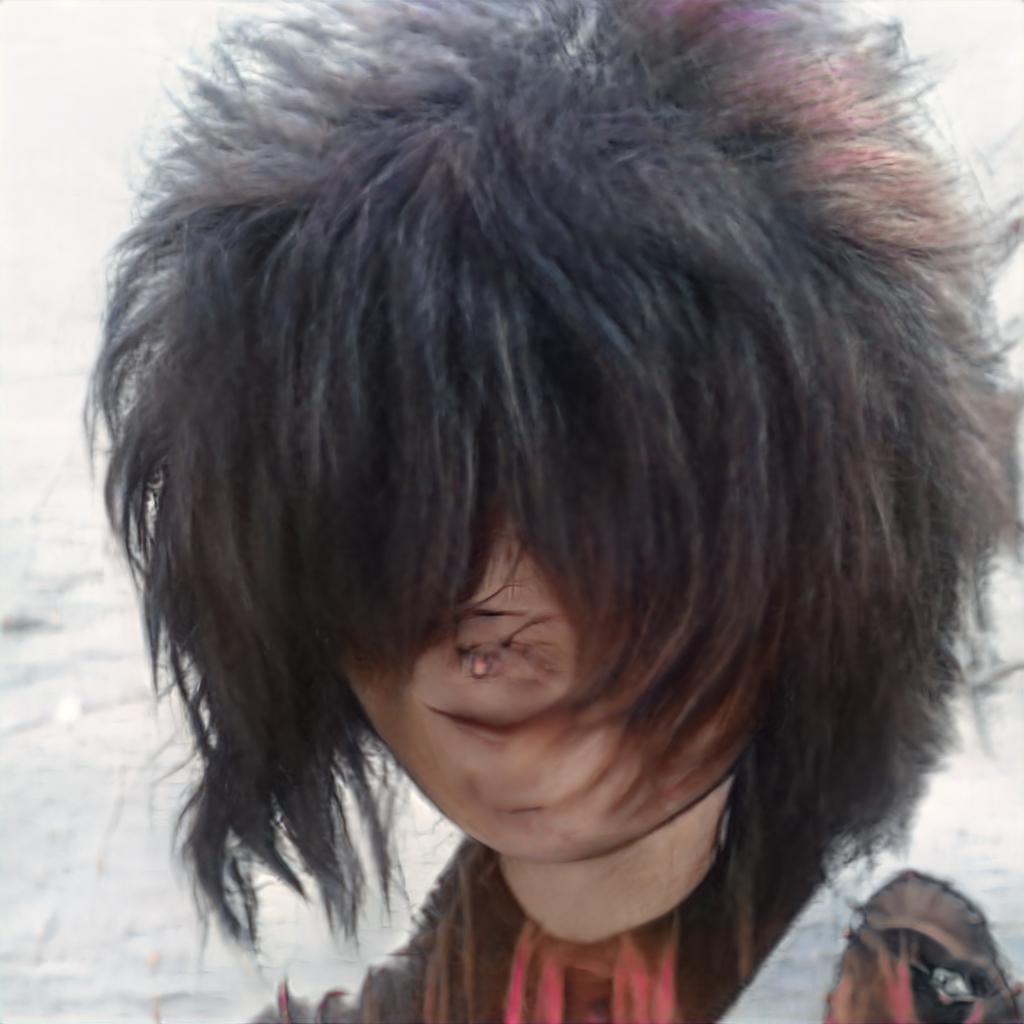

The Smart were filtered out from their places of refuge on the web by the anti-Smart. The anti-Smart gained access to groups through falsely identifying as the Smart themselves, and once in, through auto-spamming, account blocking and general consistent abuse, the anti-Smart corrupted the spaces, driving the Smart from the online communities.

The abuse was maintained off-line through physical attacks and anti-Smart propaganda. The Smart, for their safety, cut their hair and took to ‘flat’ hairstyles, or wigs that they could remove if needed.

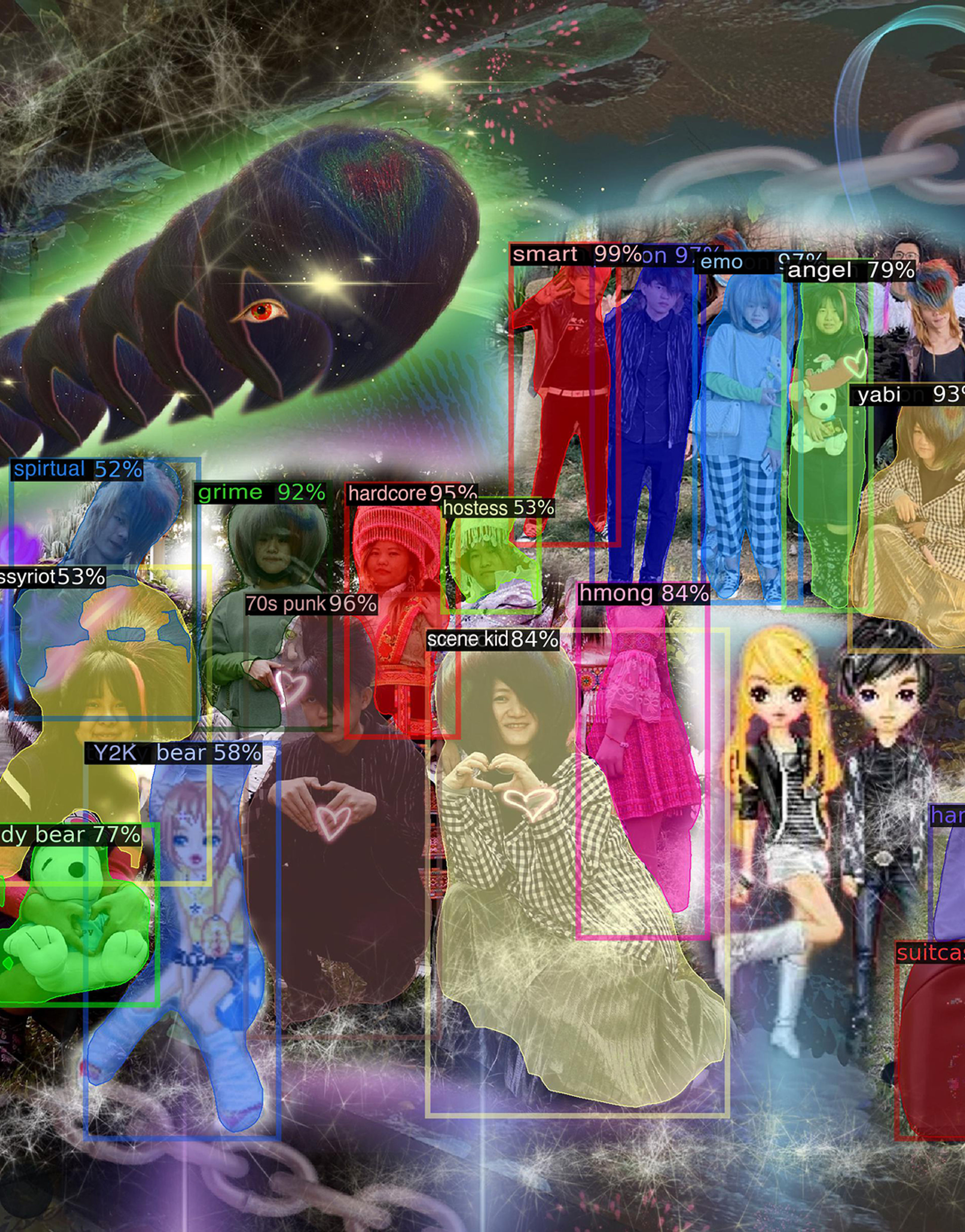

@beYourOwnMastr bot aims to contaminate the filtered space. The Smart were filtered out, in real life and online. Humans failed to recognise correctly. @beYourOwnMastr bot uses generative models to access data sets and reconnect to traces of Smart. Through Twitter, the bot will republicise Smart voices in the public domain.

Some accompanying text about the twitter feed.

beYourOwnMastr bots’ content is the output from the GPT-2 language model trained on a dataset of Smart interview transcriptions by Ye and Rogers. This output is interspersed with scripts translated by hwcha Martian to Chinese translator, DeepL Translate, Google translate and edited by Funa Ye and Kate Rogers.

Text to image generation via generative adversarial networks through Sudowrite, wenxin Yige, Dall-e and RunwayML.

Sudowrite bases its responses on OpenAI trained NLP model GPT-3 and extra training from scraped ‘large parts’ of the internet. DeepL uses artificial neural networks trained using the supervised training method on “many millions of translated texts” found by specially trained crawlers. They do not disclose what models they use. Google Translate explains its process as statistical machine translation, trained on millions of documents that have already been translated by human translators and sourced from organizations like the UN and websites from all around the world. Language models trained through RunwayML use as their base the OpenAI trained NLP model GPT-2.