Using Virtual Reality to simulate the effects of a new mode of police surveillance

18 November 2022

Trust and legitimacy are essential to a functioning legal system. To secure legal compliance, police need to demonstrate that they are a moral, just and appropriate institution that has the right to enforce the law. When people willingly abide by the law, this reduces the need for costly and minimally efficient modes of policing based on force, deterrence and intrusive surveillance.

Yet, AI and new technologies are increasingly being used by the police, raising issues of fairness, ethics and privacy. When police use drones to turn everyday situations into moments of surveillance, does this damage trust and legitimacy?

In this project we use Virtual Reality (VR) to study the impact of police drones in the context of policing and public health. Placing people in an everyday urban landscape in which they are stopped and scanned by a drone (for routine policing or for COVID-19 surveillance), we test a psychological theory about the subjective experience of procedural justice and legitimation in the context of the use of surveillance technology.

On the one hand, we assess whether an interaction with a police drone increases or decreases public trust and police legitimacy. On the other hand, we test whether the effects of the surveillance encounter are shaped by the experienced fairness of decision-making and interpersonal treatment.

One defining feature of this ambitious social science study is the photo-realistic quality of the experience; another is the use of Steam and directed advertising to recruit research participants at scale in the US and UK.

- The technical backdrop

The London School of Economics (LSE) and University College London (UCL) engaged Enosis VR, a company based in California, to develop a VR application with a sophisticated backend system that helps social psychologists run VR studies at scale. Developing VR is costly and requires advanced knowledge of game engines, 3D modeling and rendering pipelines, software development, and optimization techniques. Since the goal of this psychological VR experiment was to include as many participants as possible, our performance benchmark was the 2014 Nvidia GTX 980 graphics card, the first VR-enabled GPU on the market. A high level of realism is required to engage users, allowing them to embody the experience and engage with the police drone interactions. To develop the application, we used Unreal Engine (v4.25) rather than Unity 3D because Unreal has a better lighting and shading system and more advanced rendering optimizations, ensuring visual quality and performance.

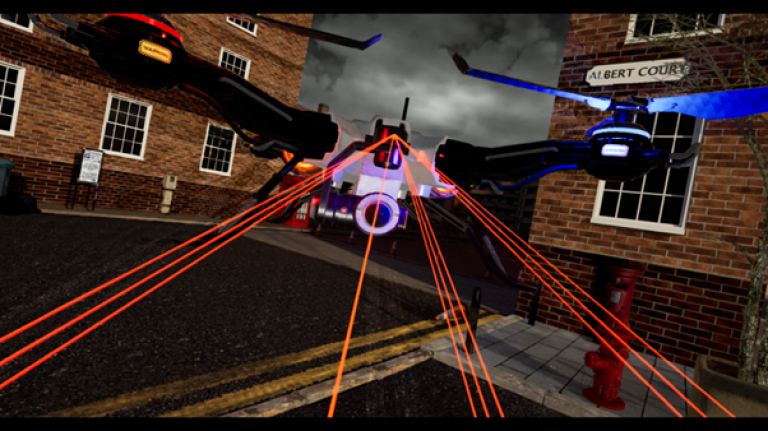

Our highly optimized 3D modeling and rendering pipeline began with a detailed Design Document containing reference images from a specific site in London, as seen in Fig. 1, and specific targets for geometry complexity, polygon count, and texture sizes.

Working with a team of technical artists from Los Angeles, California, we reproduced two scenes in full detail, one resembling a UK city and another resembling a US city, both using the industry-standard 3D modeling software Maya 2019. Every aspect of the world was referenced and reproduced in full 3D detail. We used professional cinematic rendering tools to pre-visualize and fine-tune our environment. Of course, no game engine could render such a detailed model in real time. Our technical artists therefore wrote custom exporters in Python that export each referenced building, car, and other object from the original hi-res scene with light and shadows baked directly into the textures. This meant that the scene could carry all the original qualities at a fraction of the complexity. To optimize further, the scripts could specify the level of detail (LOD) of each asset relative to the starting position of the user, so that the final quality and resolution of the textures took distance into account. We then imported the scene into Unreal Engine using an XML-based hierarchical reference and 4K textures. To increase immersion in the scene, all buildings, cars, props, and other objects within the user's reach, which we commonly refer to as the user's near field, were imported as 3D models with optimized geometry, Physically Based Rendering materials, and detailed photoreal textures, all of which allowed a fully stereoscopic effect.
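As an illustration of the distance-based level-of-detail step described above, here is a minimal Python sketch. It is not the exporter used in the project: the distance bands, texture sizes, the `Asset` class, and the `assign_lod` function are all hypothetical, chosen only to show how an asset's distance from the user's starting position could drive its LOD and texture resolution.

```python
import math
from dataclasses import dataclass

# Hypothetical bands: (max distance in metres, LOD level, texture size in px).
# The article does not state the thresholds that were actually used.
LOD_BANDS = [
    (10.0, 0, 4096),  # near field: full geometry, 4K textures
    (30.0, 1, 2048),
    (80.0, 2, 1024),
]
FAR_LOD = (3, 512)  # everything beyond the last band


@dataclass
class Asset:
    name: str
    position: tuple  # (x, y, z) in scene units, assumed to be metres


def assign_lod(asset, user_start):
    """Return (lod_level, texture_size) based on distance to the user's start."""
    distance = math.dist(asset.position, user_start)
    for max_distance, lod, texture_size in LOD_BANDS:
        if distance <= max_distance:
            return lod, texture_size
    return FAR_LOD
```

Under these assumed bands, a car 5 m from the starting position would keep full detail, while a building 200 m away would fall into the coarsest level.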

Finally, we used Unreal’s reflection probes system to provide an additional layer of realism, rendering reflections on surfaces near the user, such as cars, windows, and painted objects, so that they seem to respond dynamically to the user's head movement.

There are 16 unique scenarios, and each participant is randomly assigned to one of them. Some depict a fully autonomous drone, and others a drone controlled remotely from the local police headquarters. Some interactions are respectful, and others are rude and aggressive. Finally, some relate to crime, and others to monitoring public health, reminiscent of the recent COVID-19 pandemic.
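The random assignment of participants to scenarios can be sketched in Python as follows. The first three factors come from the description above. The fourth factor (UK versus US city) is an assumption made here so that the combinations add up to 16; the article does not spell out how the 16 scenarios are composed. The names `FACTORS`, `SCENARIOS`, and `assign_scenario` are hypothetical.

```python
import itertools
import random

FACTORS = {
    "control": ["autonomous", "remote_controlled"],
    "treatment": ["respectful", "rude"],
    "purpose": ["crime", "public_health"],
    # Assumption: the two city scenes act as the fourth factor (2*2*2*2 = 16).
    "city": ["uk", "us"],
}

# Every combination of factor levels, one dict per scenario.
SCENARIOS = [
    dict(zip(FACTORS, combo)) for combo in itertools.product(*FACTORS.values())
]


def assign_scenario(rng=random):
    """Pick one scenario uniformly at random for a participant."""
    return rng.choice(SCENARIOS)
```

Passing a seeded generator, for example `assign_scenario(random.Random(42))`, makes an assignment reproducible for testing.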

An important engineering aspect of the project was developing an AI model for auto-navigating the drone. According to this model, the drone constantly tries to stay in front of the user at eye height while its camera tracks the user. The drone always keeps a safe distance from the user, and if the user tries to assault it, that distance increases. The navigation model combines three elements: blocking out the volume and area occupied by the user, defining the allowed navmesh, and detecting collisions with the objects and props in the scene. Together, these allowed us to fine-tune the drone’s movement within the scene and reach a believable level of realism in its navigation.
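The core positioning rule described above (stay in front of the user at eye height, keep a safe distance, and back off after an assault) can be sketched in Python. This is a simplified illustration under stated assumptions, not the project's navigation model: it ignores the navmesh and collision detection, and the constants and the functions `drone_target` and `camera_yaw` are hypothetical.

```python
import math

# Hypothetical tuning values in metres; the article gives no numbers.
BASE_DISTANCE = 2.0   # default safe distance from the user
RETREAT_STEP = 1.0    # extra distance added per assault attempt
MAX_DISTANCE = 6.0    # upper bound so the drone stays in view


def drone_target(user_pos, user_yaw, eye_height, assault_count=0):
    """Return the (x, y, z) point the drone should fly towards.

    user_pos is the user's (x, y) ground position, user_yaw is the direction
    the user is facing in radians, and z is set to the user's eye height.
    """
    distance = min(BASE_DISTANCE + RETREAT_STEP * assault_count, MAX_DISTANCE)
    x = user_pos[0] + distance * math.cos(user_yaw)
    y = user_pos[1] + distance * math.sin(user_yaw)
    return (x, y, eye_height)


def camera_yaw(drone_pos, user_pos):
    """Return the yaw (radians) that points the drone's camera at the user."""
    return math.atan2(user_pos[1] - drone_pos[1], user_pos[0] - drone_pos[0])
```

In a real engine the target point would then be projected onto the allowed navigation area and checked for collisions before the drone moves towards it.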

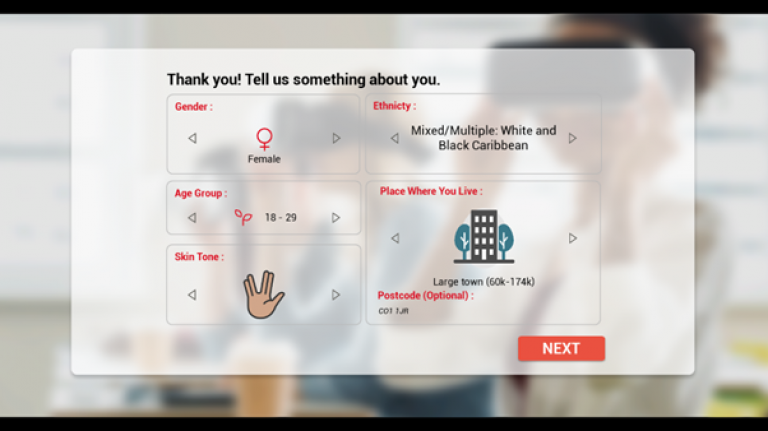

The quality of every scientific study relies on a sophisticated method for developing questionnaires and for collecting and analyzing response data. While we expect powerful survey platforms like Qualtrics to provide native integration with VR/AR authoring platforms in the future, there is unfortunately no simple solution to date for authoring, distributing, and collecting data from questionnaires integrated into VR experiences. Therefore, in anticipation of thousands of user responses worldwide, we developed a backend architecture based on Google Firebase that offers psychologists many of the core Qualtrics capabilities, letting them develop multiple studies and publish them with the main VR application framework.

Different databases can be assigned to different instances of the VR application using a unique code as the researchers develop online and offline (site-specific) studies. For simplicity, we developed the user interface for our backend system and for authoring the questionnaires in Retool, providing researchers with all basic and advanced functionality for authoring studies and developing questionnaires with multiple constructs and types of responses. We developed a system that supports different methods for selecting constructs and questions, based on weighted random distribution, series, and rules, and syncs them with the front-end VR application. Researchers can set initial weights for the distribution of their constructs in a questionnaire and monitor responses in the database in real time. Based on these analytics, they can adjust the distribution weights on the fly to prioritize the collection of certain data as their study evolves. The database performs automatic calculations on the responses and feeds them to the front-end VR application, so users can see how their responses compare with those of other participants after completing the questionnaire.
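The weighted random selection of constructs and the on-the-fly adjustment of weights can be sketched in Python. This is an illustrative assumption about how such a scheme might work, not the backend's actual logic: the functions `pick_constructs` and `rebalance`, the rebalancing rule, and the construct names used in the usage note are all hypothetical.

```python
import random


def pick_constructs(weights, k, rng=random):
    """Draw up to k distinct constructs with probability proportional to weight.

    weights maps construct name -> non-negative weight. Constructs with a
    weight of zero are never selected.
    """
    remaining = {name: w for name, w in weights.items() if w > 0}
    chosen = []
    while remaining and len(chosen) < k:
        names = list(remaining)
        pick = rng.choices(names, weights=[remaining[n] for n in names], k=1)[0]
        chosen.append(pick)
        del remaining[pick]  # sample without replacement
    return chosen


def rebalance(weights, counts, target):
    """Scale each weight by the share of the target still left to collect.

    counts maps construct name -> responses collected so far; target is the
    desired number of responses per construct (a hypothetical rule).
    """
    adjusted = {}
    for name, weight in weights.items():
        shortfall = max(target - counts.get(name, 0), 0)
        adjusted[name] = weight * shortfall / target
    return adjusted
```

For example, with weights `{"trust": 2.0, "legitimacy": 2.0}` and 50 of 100 target responses already collected for `trust`, `rebalance` halves the weight of `trust` so that later participants are more likely to be asked about `legitimacy`.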

We protect users’ personal behavioral and physiological data. All demographic data collection in the study is voluntary, and users choose whether to self-report their age, ethnicity, race, skin color, and sexual orientation, as well as the size and area of the place where they live. All input is anonymized, and the database is kept secure under standard user account authentication and Google Cloud security for accessing the data. Virtual Reality is a fully synthesized and controlled experience that can capture a multitude of additional behavioral information from users. Examples include the total time that the user makes eye contact with the drone, any attempt to hit the drone, the level of compliance with the requests of the police drone, and the response time for completing the questionnaires. At this point, the only additional information we collect is any attempt to hit the drone.

London School of Economics, University College London, and Enosis VR stand at the forefront of innovation in leveraging technology to perform large-scale cutting-edge psychological experiments.

- Screenshots from within the VR environment

- Data collection screenshots
