Social robots for autism education

Developing a robot-assisted intervention programme for emotion teaching, powered by artificial intelligence

6 December 2017

By Dr Alyssa M. Alcorn.

Autistic children frequently have difficulties in understanding and using social and emotional cues, and researchers agree that targeting these developmental “building blocks” can have broad, long-term benefits.

Existing research has shown promise in using robot-assisted interventions for teaching social and academic skills to autistic children, including emotion recognition. Most of this work has focused on older children in mainstream settings, and has not addressed young children or a wider range of cognitive and language abilities. Given claims that robot-assisted interventions could present lower, less complex social demands than human-led interventions, it is particularly important to investigate their feasibility for children whose social, daily life, or language skills may present barriers to participation in “traditional” interventions.

As part of a Europe-wide consortium, researchers from IOE have contributed expertise in autism and psychology to designing this new programme and are leading its evaluation with autistic children and special schools.

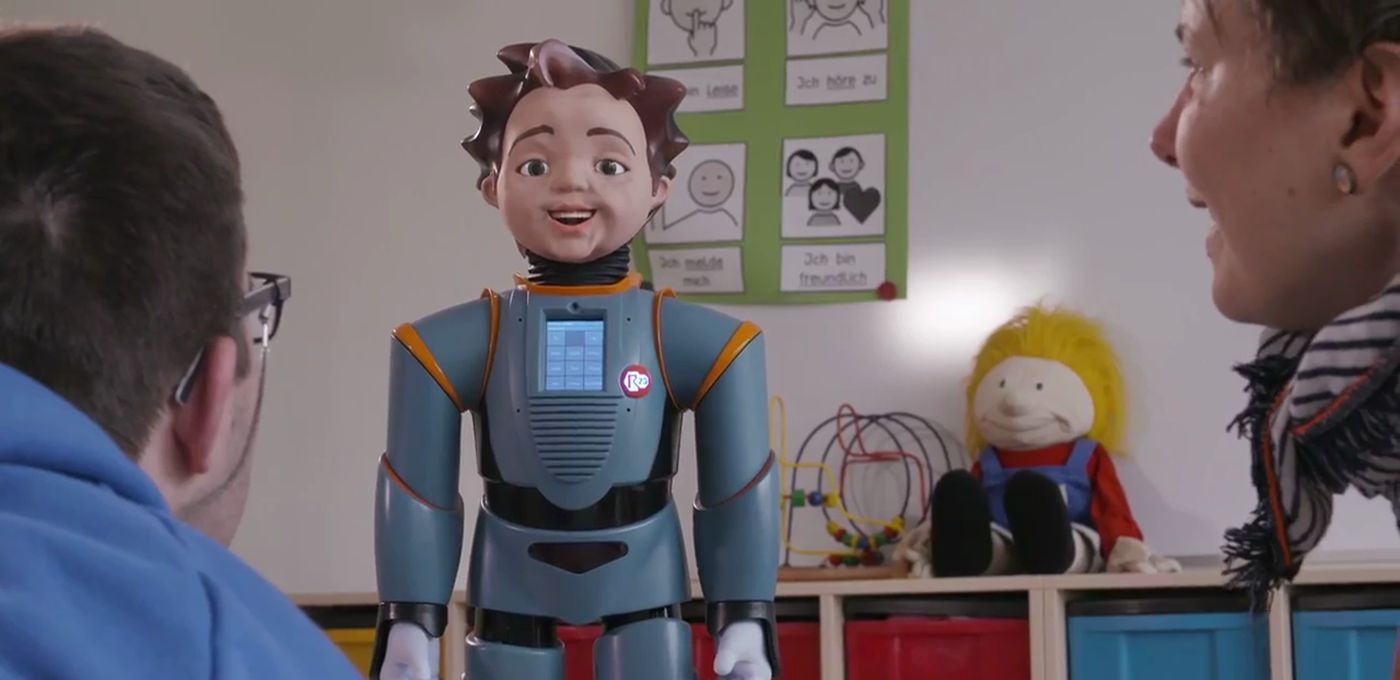

Hello, Zeno

So far, 66 school-aged autistic children from 3 London special schools have participated, with an additional 66 children participating in Belgrade. Half were randomly assigned to an emotion recognition teaching programme led by an adult, and half to the same programme assisted by Zeno the robot. The majority of our participants had intellectual disabilities and language issues in addition to autism.

Next steps

Results and insights from the first round of DE-ENIGMA robot studies, teacher interviews, and classroom observations have encouraged us to re-shape the emotion teaching programme away from “one size fits all” direct instruction, and toward smaller, flexible, more game-like “modules”.

IOE’s DE-ENIGMA team is currently preparing the results of these initial studies, comparing the performance of children in the robot-led and adult-led conditions, as well as making cross-cultural comparisons between Serbia and the UK. Classroom observations and interviews conducted with educators in London special schools provide additional, qualitative context for this experimental work, and have helped to identify teaching and interaction strategies that can be adapted for Zeno the robot’s future activities.

Initial results suggest that many aspects of the robot-assisted programme were highly successful in both the UK and Serbia, particularly in terms of fostering children’s interest and engagement in the emotion activities.

The IOE team is collaborating closely with the University of Twente on these new teaching activities, which will be enabled by multi-modal artificial intelligence developed by the DE-ENIGMA technical teams. Over several rounds of development, Zeno will be equipped to process audio, video, and gesture input in real time, and autonomously plan interactions with a child user.

By 2019, DE-ENIGMA will have advanced, state-of-the-art functionality for analysing speech, faces, and motion in real time, all under messy, real-world conditions. In the final version of the DE-ENIGMA system, Zeno the robot will lead a novel, intelligent emotion-teaching programme shaped by autism research and teacher input, which will be evaluated in a randomised controlled trial.

Another thing...

A parallel output for this project will be the DE-ENIGMA database, a multi-modal online database of audio, video, and gesture data from child participants, with many behaviours already labelled by autism experts. So far, it includes 121 British and Serbian children, representing 152 hours of interaction and ~13 terabytes of data. It will be the largest existing dataset of its kind, and will be available free to academic researchers worldwide from 2018. It represents a rich resource for research questions about autism, including child-robot or child-adult interactions, emotion recognition, social and communicative behaviours, and cross-cultural comparisons. It also provides the scale of data needed for furthering machine learning, computer vision, audio processing and other technical approaches that aim to represent and adapt to autistic behaviours.
