The Institute of Healthcare Engineering at New Scientist Live 2017

8 January 2018

Our team headed to ExCeL London to share some of our research with attendees of New Scientist Live (28 September – 1 October 2017).

With approximately 3,000 visitors to our stall over 4 busy days, our team was inspired by the diversity of the questions asked and the interest shown.

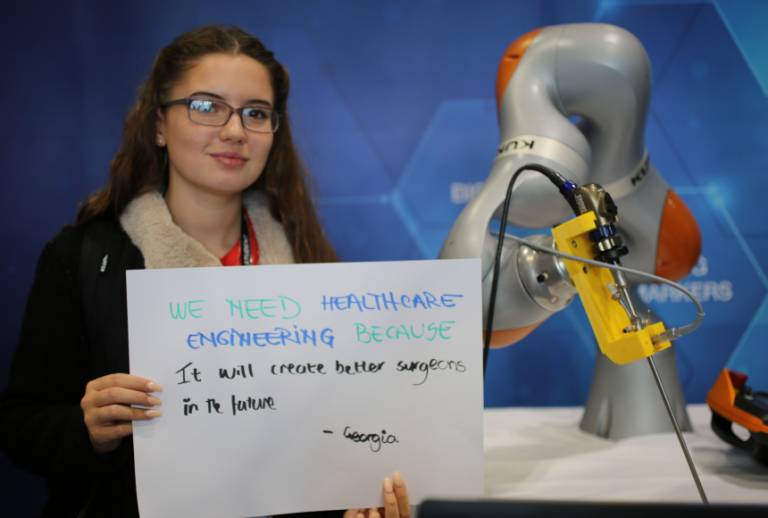

The centrepiece of our exhibit was a KUKA robotic arm that is being developed to assist with surgical interventions. The robot had been adapted to hold an endoscope and was programmed to perform pre-defined motions over a phantom placenta. The magnified images from the endoscope’s camera were displayed on a screen to give visitors an indication of the surgeon’s viewpoint, which is restricted by the small access port used in minimally invasive surgery.

The robotic arm was a talking point from which we discussed some of our research developments in robotic assisted imaging. In particular, we highlighted the GIFT-Surg project, which aims to advance fetal surgery, and described the intervention for twin-to-twin transfusion syndrome (TTTS) for which the adapted arm and endoscope are being developed. The robot plays a key role in motion stabilisation, allowing micro-precise navigation with the aim of minimising the risk of such a complex surgical procedure. To find out more about this research, please read our article about surgical robots and robotic assisted imaging.

One of the most popular highlights on our stall was a pair of demonstrations that allowed visitors to try their hand at being a surgeon. In the first, participants used specially adapted Wii controllers built into a laparoscopic set-up to play a video game. This mimicked the sensitive and precise movements required to perform minimally invasive surgery, and many visitors commented on how surprised they were by just how delicate the required motor skills are.

Once participants had mastered that training tool, they could test their surgical skills on an advanced simulation of a hip replacement operation. The simulation used a haptic robot with a stylus, which visitors held in place of a surgical instrument. This provided highly realistic haptic feedback from the hip displayed on screen, including the tiny ridges and grooves along the bone, and was combined with an augmented reality (AR) headset to make the experience more immersive.

Visitors were then able to find out more about the related research: they learnt how researchers are developing the simulator to incorporate patient-specific data and re-create exact replicas of patients’ hip bones to assist with pre-operative planning. This aims to minimise the risk to patients by allowing surgeons to practise the procedure before surgery and anticipate scenarios as they might actually arise. Further details about this project and related research can be found in our research piece on surgical rehabilitation.

Our next demonstration explained how imaging biomarkers can help to detect and diagnose neurological conditions such as Alzheimer’s Disease (AD). To help visitors explore this, we displayed 3 scenarios, each representing a different stage of disease progression: a healthy control brain, a brain showing mild cognitive impairment and a brain with advanced AD.

To help visitors work out which brain matched which diagnosis, and why, we provided MRI scans, clinical reports and life-size 3D-printed models of the brains. We found that the phantom brains really helped younger visitors to visualise the scenarios, and they also showcased the modern capabilities of 3D printing.

This demonstration led to further discussion of how quantitative analysis can enable accurate early diagnosis of AD and, in turn, enhance patients’ quality of life and independence whilst living with the disease. In particular, we highlighted software under development that automatically derives robust, objective measurements from clinical neuroimages, as shown in the clinical reports on display. You can find out more in our article on how imaging biomarkers are used in healthcare research.

Our final interactive demonstration was a virtual reality (VR) headset that simulated what it is like to live with different sight loss conditions, which affect almost 2 million people in the UK alone. Visitors could virtually experience common scenarios such as crossing the road or a trip to the supermarket, using 5 different settings that each showed the viewpoint of someone with a different visual impairment. Once visitors had described to our team what they could see and how their sight was impaired, we were able to tell them which eye disease it corresponded to.

We then used this ‘diagnosis’ to discuss the implications of that disease and to share information about research within the Institute that is being developed to tackle it. Some of this research, along with related projects, is covered in our article about how engineering technologies are being used to overcome clinical challenges in ophthalmology.

Our time at New Scientist Live was also an opportunity to explore the rest of the festival and uncover the best ideas and discoveries from other scientific fields. This was the second annual New Scientist Live, and the event had grown to feature over 120 speakers and 60 exhibitions across 5 immersive zones: Cosmos, Earth, Humans, Technology and Engineering. It attracted over 30,400 attendees.

We were situated between the Humans and Technology zones, which led to many interesting discussions with neighbouring exhibitors about artificial intelligence (AI), the fragility of memory, genome sequencing, progress in cancer treatment, the newest wearable technologies and more. Speaker highlights included Tim Peake and other astronauts describing their travels in space, the sci-fi author Margaret Atwood revealing how scientific theories have informed her work, and the founder of DeepMind mapping out his thoughts on the future of AI.

From healthcare professionals to amateur enthusiasts, there was certainly no typical audience member, but everyone we met seemed united by a sharp curiosity to find out more. We were particularly impressed by the younger generation of budding engineers and scientists we met, including a 15-year-old boy who handed us a business card declaring himself a ‘problem solver’ and ‘perfectionist’, and 3 sisters who neatly filled an entire notebook page with detailed facts they had learnt about the brain at our stall.

We would like to thank everyone who came to visit us at the festival and asked us so many perceptive and stimulating questions. We look forward to staying in touch; please sign up to our monthly newsletter here or come and see us at our next exhibition at Bloomsbury Festival on Saturday 21 October 2017.

To see more of our photos from New Scientist Live, take a look at our Facebook album or engage with our #ScienceThatHeals campaign on Twitter.