Conversation Piece

Conversation Piece, ISEA 09, Ormeau Baths Gallery, Belfast

Conversation Piece was developed over a four-year period at University College London in collaboration with The Centre for Speech Technology Research, University of Edinburgh.

Work in Progress, EAR Institute, University College London, December 2006

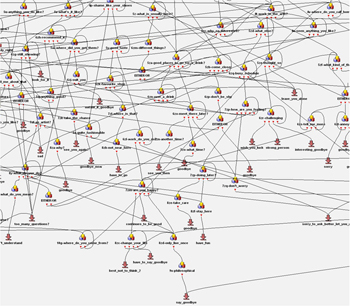

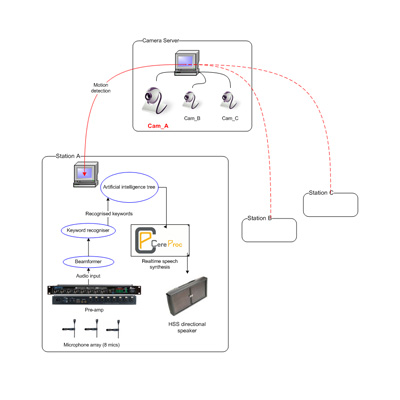

A real-time video detection system uses an adaptive background mask to track movement within the fields of view of two cameras, noting the approach of a visitor and triggering the room's initial statement. The voice of the room ('Heather') is generated using CereVoice, the speech synthesis engine from CereProc, which allows Heather's spoken responses to be generated 'on the fly' rather than pre-recorded. The responses are delivered through HyperSonic Sound speakers, which use ultrasonic transducers to 'beam' sound in a particular direction.

Once the room's opening comment has been made, the conversation is directed by a 'conversation tree': each node represents a statement the room can make, and the nodes are connected by branches. At each node the system listens for certain keywords in the user's response and takes the branch associated with the keyword it hears.
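The conversation tree can be pictured as a small linked data structure in which every branch is labelled by the keyword that selects it. The Python below is a minimal sketch under stated assumptions, not the system's actual code: the names `Node`, `keywords` and `step` are hypothetical, the keyword spotter is assumed to report at most one keyword per turn (or `None`), and the `fallback` branch taken when no keyword is heard is an assumption the original does not describe. The statements are placeholders, not the work's script.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Node:
    """One statement the room can make, plus its outgoing branches."""
    statement: str
    # Each branch is labelled by the keyword that selects it.
    branches: Dict[str, "Node"] = field(default_factory=dict)
    # Hypothetical fallback used when none of the branch keywords is heard.
    fallback: Optional["Node"] = None

    def keywords(self):
        """Keywords the spotter should listen for while at this node."""
        return sorted(self.branches)


def step(node: Node, spotted: Optional[str]) -> Optional[Node]:
    """Follow the branch for the spotted keyword, or the fallback if none matched."""
    if spotted is not None and spotted in node.branches:
        return node.branches[spotted]
    return node.fallback


# Hypothetical three-node tree; the statements are placeholders.
end = Node("<closing statement>")
agree = Node("<reply to 'yes'>", fallback=end)
root = Node("<opening statement>", branches={"yes": agree, "no": end}, fallback=end)

print(root.keywords())               # ['no', 'yes']
print(step(root, "yes").statement)   # <reply to 'yes'>
print(step(root, None).statement)    # <closing statement>
```

Because each node exposes its own keyword list, the spotter's vocabulary can be swapped every time `step` moves to a new node, which mirrors the per-node keyword update used by the keyword spotter.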


One of the design criteria for the system was that the interface be completely transparent to the user. This constrains the audio capture system for the keyword spotter. Such systems traditionally work best when the user wears a close-talking microphone; in this application, however, that is neither practical nor transparent. Instead, we developed a real-time microphone array beamforming system: eight microphones are embedded in the display plinths, and their signals are processed to 'listen' in the direction of the person standing at the plinth. The beamformer, running as a VST plugin, implements a superdirective beamformer directed at the target speaker, with a post-filter that cancels sounds from beams directed at the other plinths. The keyword spotter is based on ATK, the real-time extension to the HTK speech recognition toolkit. A finite-state language model, comprising the keywords to be spotted in parallel with a monophone garbage model, is used for out-of-vocabulary (OOV) word rejection; the keywords are updated each time the system moves to a different node in the conversation tree.
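A superdirective beamformer can be illustrated, for a single frequency bin, by the textbook formulation: weights that minimise the output power of spatially diffuse noise while passing sound from the look direction undistorted, w = (Γ + μI)⁻¹d / (dᴴ(Γ + μI)⁻¹d). The sketch below assumes a hypothetical uniform linear array of eight microphones 5 cm apart, far-field sources and a diffuse (sinc-coherence) noise field; the real plinth geometry, the STFT framing, the VST wrapper and the post-filter are not shown, and every name here is illustrative rather than taken from the original system.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, roughly room temperature


def steering_vector(positions, angle_rad, freq_hz):
    """Far-field steering vector for microphones on a line (positions in metres)."""
    k = 2 * math.pi * freq_hz / SPEED_OF_SOUND
    return [cmath.exp(-1j * k * x * math.cos(angle_rad)) for x in positions]


def diffuse_coherence(positions, freq_hz):
    """Coherence matrix of spherically isotropic noise: sinc(k * mic distance)."""
    k = 2 * math.pi * freq_hz / SPEED_OF_SOUND
    n = len(positions)
    gamma = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            arg = k * abs(positions[i] - positions[j])
            gamma[i][j] = 1.0 if arg == 0 else math.sin(arg) / arg
    return gamma


def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0j] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def superdirective_weights(positions, angle_rad, freq_hz, loading=1e-2):
    """w = (Gamma + mu*I)^-1 d / (d^H (Gamma + mu*I)^-1 d)."""
    d = steering_vector(positions, angle_rad, freq_hz)
    gamma = diffuse_coherence(positions, freq_hz)
    for i in range(len(d)):
        gamma[i][i] += loading  # diagonal loading limits white-noise amplification
    g_inv_d = solve(gamma, d)
    denom = sum(di.conjugate() * gi for di, gi in zip(d, g_inv_d))
    return [gi / denom for gi in g_inv_d]


def beamform_bin(weights, snapshot):
    """Beamformer output y = w^H x for one frequency bin of the 8 mic spectra."""
    return sum(wi.conjugate() * xi for wi, xi in zip(weights, snapshot))


# Hypothetical 8-microphone line, 5 cm spacing, steered to 60 degrees at 1 kHz.
mics = [i * 0.05 for i in range(8)]
w = superdirective_weights(mics, math.radians(60), 1000.0)
target = steering_vector(mics, math.radians(60), 1000.0)
print(abs(beamform_bin(w, target)))  # distortionless: ~1.0 up to rounding
```

Raising `loading` trades directivity for robustness: as it grows, the weights approach those of a plain delay-and-sum beamformer.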

system design

The Conversation Piece system was developed by:

Alun Evans (UCL), Zhuroan Wang (UCL) and Mike Lincoln (University of Edinburgh)

Concept, design, project management and research by:

Alexa Wright