Touchless Computing with UCL’s MotionInput v2.0 and Microsoft

15 July 2021

UCL Computer Science staff and students have developed new methods for controlling a computer user interface with just a webcam. The project is a collaboration with Microsoft and works with existing software applications and games.

The Covid-19 pandemic has brought a range of new use cases for computing interactions. Prior to the vaccine programme, how users touch equipment, keyboards, mice and computer interfaces was of particular concern.

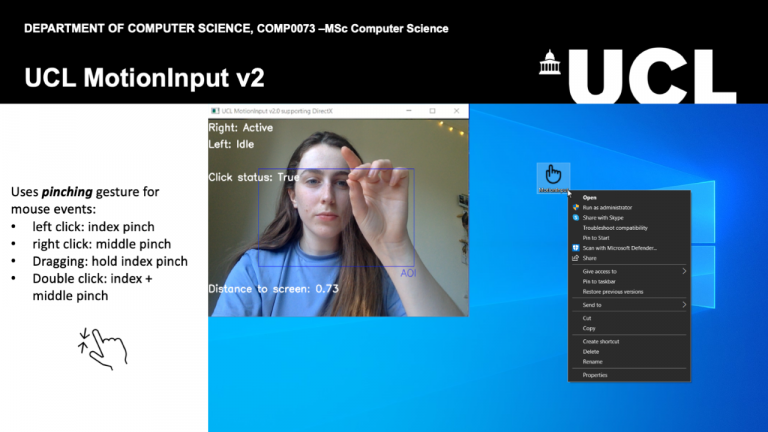

Recent advances in gesture recognition technology, computer vision and machine learning open up a world of new opportunities for touchless computing interactions. UCL’s MotionInput supporting DirectX v2.0 is the second iteration of our Windows-based library. It uses several open-source and federated on-device machine learning models, meaning that it is privacy safe in recognising users. It captures and analyses interactions and converts them into mouse and keyboard signals for the operating system to make use of in its native user interface. This enables full use of a user interface through hand gestures, body poses, head movement and eye tracking, with only a standard existing webcam.

Touchless technologies allow users to interact with and control computer interfaces without any form of physical input, using gesture and voice commands instead. As part of this, gesture recognition technologies interpret human movements, gestures and behaviours through computer vision and machine learning. Traditionally, however, these gesture control technologies have relied on dedicated depth cameras such as the Kinect camera and/or highly closed development platforms such as EyeToy. As such, they remain largely unexplored for general public use outside of the video gaming industry.

By taking a different approach, one that uses a webcam and open-source machine learning libraries, MotionInput is able to identify classifications of human activity and convey them as quickly as possible as inputs to your existing software, including your browser, existing games and applications.
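As a rough illustration of the general idea of turning a recognised gesture into mouse signals, the sketch below maps a normalised fingertip position to screen coordinates and treats a thumb-to-index pinch as a left click. It is not MotionInput's implementation: the names `Landmark`, `to_screen`, `is_pinch` and `gesture_to_events`, the pinch threshold and the default screen size are all hypothetical, and the landmark coordinates are assumed to come from some hand-tracking model that is not shown here.

```python
import math
from dataclasses import dataclass


@dataclass
class Landmark:
    """A tracked point in normalised image coordinates (assumed 0..1)."""
    x: float  # 0 = left edge of the frame, 1 = right edge
    y: float  # 0 = top edge of the frame, 1 = bottom edge


def to_screen(point, width, height):
    """Clamp a normalised point to the frame and scale it to pixels."""
    cx = min(max(point.x, 0.0), 1.0)
    cy = min(max(point.y, 0.0), 1.0)
    return round(cx * (width - 1)), round(cy * (height - 1))


def is_pinch(thumb_tip, index_tip, threshold=0.05):
    """Classify a pinch: thumb and index fingertips are close together.

    The threshold is an illustrative value, not a tuned one.
    """
    distance = math.hypot(thumb_tip.x - index_tip.x, thumb_tip.y - index_tip.y)
    return distance < threshold


def gesture_to_events(thumb_tip, index_tip, was_pinching, width=1920, height=1080):
    """Turn one frame of landmarks into a list of abstract mouse events.

    Returns the events and the new pinch state, so that a button press is
    emitted only when the pinch starts and a release only when it ends.
    """
    events = [("move", to_screen(index_tip, width, height))]
    pinching = is_pinch(thumb_tip, index_tip)
    if pinching and not was_pinching:
        events.append(("left_down", None))
    elif was_pinching and not pinching:
        events.append(("left_up", None))
    return events, pinching
```

In a complete system the abstract events would be handed to the operating system's input layer, and the image would typically be mirrored so that the pointer follows the user's hand; both steps are left out of this sketch.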

MotionInput supporting DirectX has the potential to transform how we navigate computer user interfaces. For example, we can:

play our existing computer games through exercise

create new art and perform music through subtle movements, including depth conversion

keep a sterile environment when using hospital systems, while remaining responsive to highly detailed user interfaces

interact with control panels in industry where safety is important

For accessibility purposes, this technology will allow for independent hands-free navigation of computer interfaces.

Coupled with advances in computer vision, we believe that the market reach of users with webcams could bring gesture recognition software into the mainstream in this new era of touchless computing.

For more information please visit www.motioninput.com

Developed by:

Version 2.0 Authors (MSc Computer Science)

Ali Hassan

Ashild Kummen

Chenuka Ratwatte

Guanlin Li

Quianying Lu

Robert Shaw

Teodora Ganeva

Yang Zou

Version 1.0 Authors (Final year BSc Computer Science)

Emil Almazov

Lu Han

Supervisors:

Dr Dean Mohamedally

Dr Graham Roberts

Sheena Visram

Prof Yvonne Rogers

Prof Neil Sebire

Prof Joseph Connor

Dr Nicolas Gold

Lee Stott (Microsoft)

Editorial by Sheena Visram
