Jennifer Bizley is a Sir Henry Dale Research Fellow & Professor of Auditory Neuroscience.

Our research seeks to link patterns of neural activity in auditory cortex to our perception of the world around us. Sounds in an environment, such as a person talking, may be clearly intelligible at their source, but noisy and reverberant listening conditions often combine to degrade the intelligibility of the sound wave that arrives at the ear. That sound wave is the combination of all the different sounds in our surroundings. The cochlea decomposes this complex wave into its individual frequency components, which are then transmitted to the brain via the auditory nerve. The brain's challenge is to separate different sound sources from one another so that they can be identified or understood, a process known as auditory scene analysis. To do this, the brain must regroup the sound elements that arose from each individual source. Different sources can be described by their characteristics: they might have a particular pitch or a characteristic timbre. Our research falls into three broad themes:

- How are perceptual features of sounds, such as pitch, timbre or location in space encoded by neurons within auditory cortex?

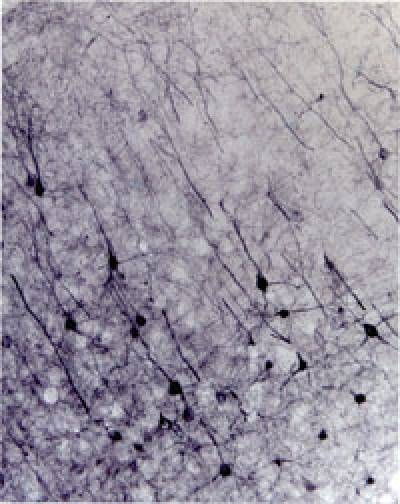

We might expect to find neurons in auditory cortex whose responses match our own perception, so that they could represent a particular pitch irrespective of whether it is played by a violin or a piano, or to our left or right. However, most neurons in auditory cortex represent multiple features of a sound; that is, their activity is often modulated by more than one stimulus dimension. Information may be 'multiplexed' within single neurons, since it is possible to extract information about different stimulus parameters from a single neuron at different time points in its response. Moreover, the responses of ensembles of broadly tuned neurons (none of which alone demonstrates invariant encoding) can be decoded to provide estimates of a stimulus that are robust to changes in irrelevant stimulus features. Still, we know little about how sensitivity to particular sound features arises, or what sort of neural representation underlies our perception. Current work is exploring (i) how spectral timbre, which determines vowel identity in speech, is extracted by auditory neurons and (ii) how auditory space, and in particular the relative spatial locations of sound sources, is represented in auditory cortex.

- How do neurons in auditory cortex create 'invariant' perceptions?

Sound identification and speech comprehension both require that we generalise across highly variable acoustic stimuli. For example, we can recognise vowel sounds whether they are spoken by a high- or low-pitched voice, across accents and genders, and in noisy environments. We seek to understand how such invariance, i.e. the ability to maintain perceptual constancy in the face of identity-preserving transformations, arises within auditory cortex. We examine neural and behavioural discrimination of sound features, such as spectral timbre, across varied listening conditions, including in the presence of noise. By recording from populations of neurons across multiple auditory areas, we hope to track the emergence of invariant representations.

- When and how does what we see influence how we perceive sound?

We often unconsciously rely on what we see to make sense of what we are hearing (and vice versa). For example, we look at lip movements when we are trying to listen to what someone is saying in a noisy situation.

Traditionally, information from different sensory systems, such as the eye and the ear, was thought to be processed independently within the brain. Recent studies have revealed considerable cross-talk between sensory modalities even at the earliest stages of sensory cortex. For example, a sizable proportion of neurons in auditory cortex are sensitive to visual stimulation. Why should neurons in auditory cortex respond to light? One possible explanation is that visual inputs might increase the spatial acuity of auditory neurons. Another is that visual inputs might help to parse the auditory scene into its constituent sources.

Our research methods combine human and non-human psychophysics, computational modelling and behavioural neurophysiology. Because attentional state, behavioural context and even the presence of visual stimuli can modulate or drive activity in auditory cortex, we believe that auditory cortical neurons should not be seen simply as static filters tuned to detect particular acoustic features. Measuring neural and behavioural sensitivity simultaneously is therefore key to understanding how neurons in auditory cortex support sound perception.

Funded by

Royal Society, BBSRC, Wellcome Trust, Deafness Research UK